What is Concurrent Programming?

TL;DR

We have defined concurrent programming informally, based upon your experience with computer systems. Our goal is to study concurrency abstractly, rather than a particular implementation in a specific programming language or operating system. We have to carefully specify the abstraction that describes the allowable data structures and operations. In the next chapter, we will define the concurrent programming abstraction and justify its relevance. We will also survey languages and systems that can be used to write concurrent programs.

Introduction

An ordinary program consists of data declarations and assignment and control-flow statements in a programming language. Modern languages include structures such as procedures and modules for organizing large software systems through abstraction and encapsulation, but the statements that are actually executed are still the elementary statements that compute expressions, move data and change the flow of control. In fact, these are precisely the instructions that appear in the machine code that results from compilation. These machine instructions are executed sequentially on a computer and access data stored in the main or secondary memories.

A concurrent program is a set of sequential programs that can be executed in parallel. We use the word process for the sequential programs that comprise a concurrent program and save the term program for this set of processes.

Traditionally, the word parallel is used for systems in which the executions of several programs overlap in time by running them on separate processors. The word concurrent is reserved for potential parallelism, in which the executions may, but need not, overlap; instead, the parallelism may only be apparent since it may be implemented by sharing the resources of a small number of processors, often only one. Concurrency is an extremely useful abstraction because we can better understand such a program by pretending that all processes are being executed in parallel. Conversely, even if the processes of a concurrent program are actually executed in parallel on several processors, understanding its behavior is greatly facilitated if we impose an order on the instructions that is compatible with shared execution on a single processor. Like any abstraction, concurrent programming is important because the behavior of a wide range of real systems can be modeled and studied without unnecessary detail.

In this book we will define formal models of concurrent programs and study algorithms written in these formalisms. Because the processes that comprise a concurrent program may interact, it is exceedingly difficult to write a correct program for even the simplest problem. New tools are needed to specify, program and verify these programs. Unless these are understood, a programmer used to writing and testing sequential programs will be totally mystified by the bizarre behavior that a concurrent program can exhibit.

Concurrent programming arose from problems encountered in creating real systems. To motivate the concurrency abstraction, we present a series of examples of real-world concurrency.

Concurrency as abstract parallelism

It is difficult to intuitively grasp the speed of electronic devices. The fingers of a fast typist seem to fly across the keyboard, to say nothing of the impression of speed given by a printer that is capable of producing a page with thousands of characters every few seconds. Yet these rates are extremely slow compared to the time required by a computer to process each character.

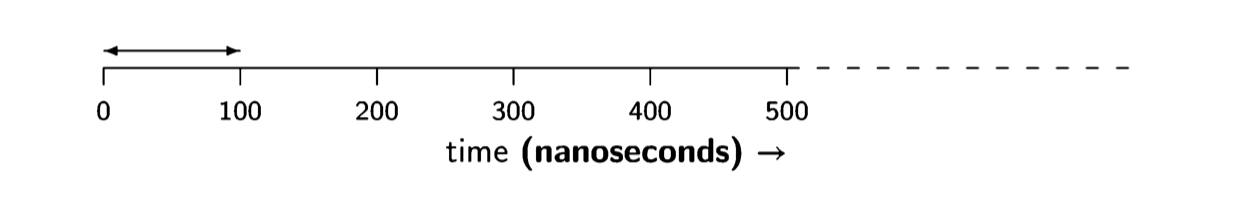

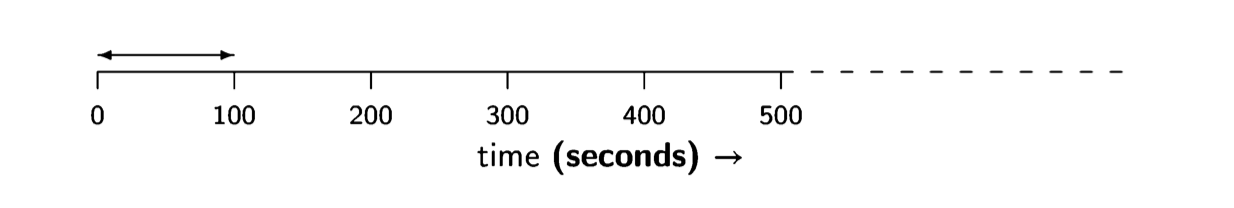

As I write, the clock speed of the central processing unit (CPU) of a personal computer is of the order of magnitude of one gigahertz (one billion times a second). That is, every nanosecond (one-billionth of a second), the hardware clock ticks and the circuitry of the CPU performs some operation. Let us roughly estimate that it takes ten clock ticks to execute one machine language instruction, and ten instructions to process a character, so the computer can process the character you typed in one hundred nanoseconds, that is 0.0000001 of a second:

To get an intuitive idea of how much effort is required on the part of the CPU, let us pretend that we are processing the character by hand. Clearly, we do not consciously perform operations on the scale of nanoseconds, so we will multiply the time scale by one billion so that every clock tick becomes a second:

Thus we need to perform 100 seconds of work out of every billion seconds. How much is a billion seconds? Since there are 60 x 60 x 24 = 86,400 seconds in a day, a billion seconds is 1,000,000,000/86,400 = 11,574 days or about 32 years. You would have to invest 100 seconds every 32 years to process a character, and a 3,000-character page would require only (3,000 x 100)/(60 x 60) ~ 83 hours over half a lifetime. This is hardly a strenuous job!
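The estimate above can be checked with a few lines of arithmetic. The sketch below uses only the figures assumed in the text (a 1 GHz clock, ten ticks per instruction, ten instructions per character); the function names are made up for illustration.

```python
# Rough figures assumed in the text, not measurements of any real CPU.
TICKS_PER_INSTRUCTION = 10
INSTRUCTIONS_PER_CHAR = 10
NANOSECONDS_PER_TICK = 1  # a 1 GHz clock ticks once per nanosecond


def ns_per_char() -> int:
    """Nanoseconds the CPU needs to process one character."""
    return TICKS_PER_INSTRUCTION * INSTRUCTIONS_PER_CHAR * NANOSECONDS_PER_TICK


def seconds_to_years(seconds: float) -> float:
    """Convert seconds to years, using 86,400 seconds per day."""
    return seconds / 86_400 / 365.25


def page_hours(chars: int = 3_000) -> float:
    """Hours of 'hand work' per page once each nanosecond is scaled to a second."""
    return chars * ns_per_char() / 3_600


print(ns_per_char())                       # nanoseconds per character
print(round(seconds_to_years(1e9), 1))     # a billion seconds, in years
print(round(page_hours(), 1))              # hours per 3,000-character page
```

Changing any of the three constants shows how sensitive the conclusion is to the rough estimates: even a tenfold error leaves the CPU idle almost all of the time.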

The tremendous gap between the speeds of human and mechanical processing on the one hand and the speed of electronic devices on the other led to the development of operating systems which allow I/O operations to proceed “in parallel” with computation. On a single CPU, like the one in a personal computer, the processing required for each character typed on a keyboard cannot really be done in parallel with another computation, but it is possible to “steal” from the other computation the fraction of a microsecond needed to process the character. As can be seen from the numbers in the previous paragraph, the degradation in performance will not be noticeable, even when the overhead of switching between the two computations is included.

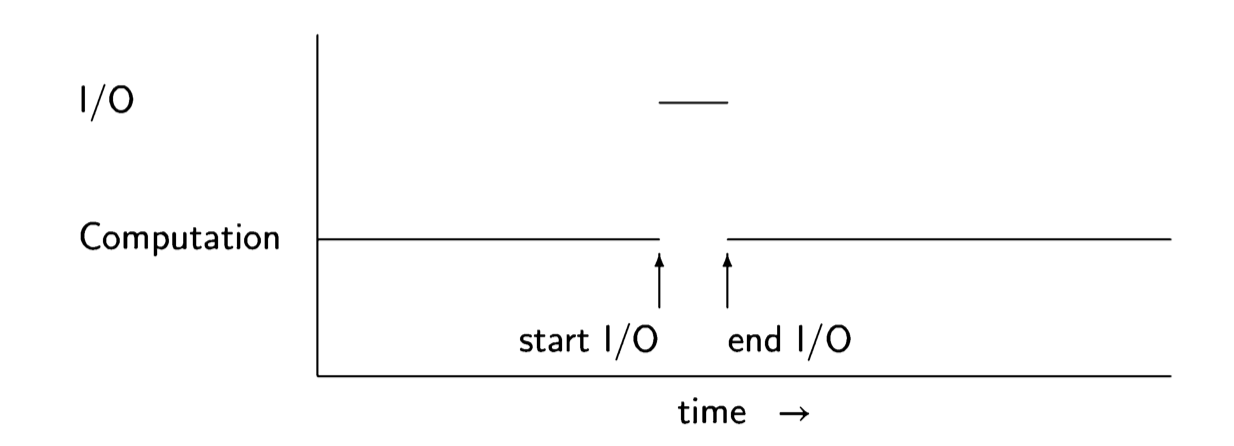

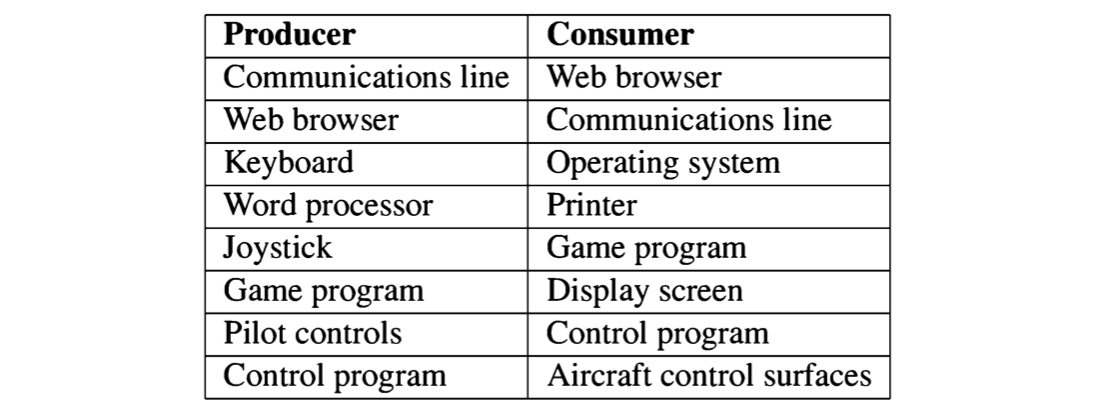

What is the connection between concurrency and operating systems that overlap I/O with other computations? It would theoretically be possible for every program to include code that would periodically sample the keyboard and the printer to see if they need to be serviced, but this would be an intolerable burden on programmers by forcing them to be fully conversant with the details of the operating system. Instead, I/O devices are designed to interrupt the CPU, causing it to jump to the code to process a character. Although the processing is sequential, it is conceptually simpler to work with an abstraction in which the I/O processing performed as the result of the interrupt is a separate process, executed concurrently with a process doing another computation. The following diagram shows the assignment of the CPU to the two processes for computation and I/O.

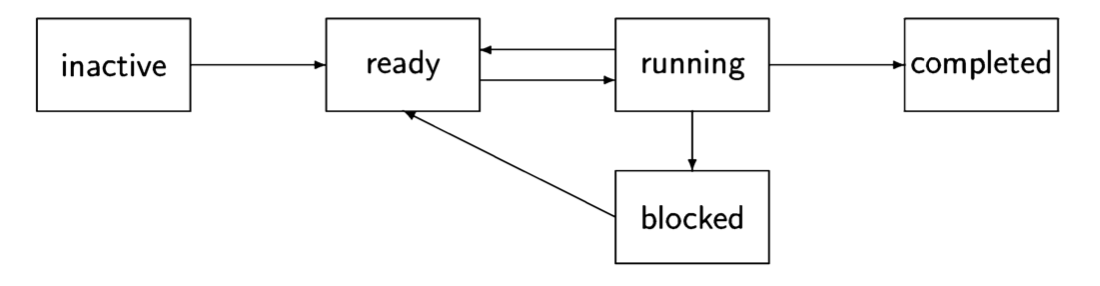

Multitasking

Multitasking is a simple generalization from the concept of overlapping I/O with a computation to overlapping the computation of one program with that of another. Multitasking is the central function of the kernel of all modern operating systems. A scheduler program is run by the operating system to determine which process should be allowed to run for the next interval of time. The scheduler can take into account priority considerations, and usually implements time-slicing, where computations are periodically interrupted to allow a fair sharing of the computational resources, in particular, of the CPU. You are intimately familiar with multitasking; it enables you to write a document on a word processor while printing another document and simultaneously downloading a file.

Multitasking has become so useful that modern programming languages support it within programs by providing constructs for multithreading. Threads enable the programmer to write concurrent (conceptually parallel) computations within a single program. For example, interactive programs contain a separate thread for handling events associated with the user interface that is run concurrently with the main thread of the computation. It is multithreading that enables you to move the mouse cursor while a program is performing a computation.
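As a minimal sketch of this idea, the following Python program runs a computation in the main thread while a second thread handles "user interface" events. The event names, the `handle_events` and `run_demo` functions, and the use of a queue to pass events are all illustrative assumptions, not a description of how any particular windowing system works.

```python
import queue
import threading


def handle_events(events: queue.Queue, log: list) -> None:
    """Event-handling thread: process events until a None sentinel arrives."""
    while True:
        event = events.get()
        if event is None:
            break
        log.append(f"handled {event}")


def run_demo():
    events = queue.Queue()
    log = []
    ui_thread = threading.Thread(target=handle_events, args=(events, log))
    ui_thread.start()

    # The main thread performs a computation and occasionally generates
    # a (hypothetical) user-interface event for the other thread.
    total = 0
    for i in range(1, 1001):
        total += i
        if i % 500 == 0:
            events.put(f"mouse-move-{i}")

    events.put(None)   # tell the event thread to stop
    ui_thread.join()   # wait for it to finish before reading the log
    return total, log


total, log = run_demo()
print(total, log)
```

The queue is what lets the two threads communicate safely; the order in which the two threads actually run relative to each other is left to the scheduler.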

Multiple computers

The days of one large computer serving an entire organization are long gone. Today, computers hide in unforeseen places like automobiles and cameras. In fact, your personal “computer” (in the singular) contains more than one processor: the graphics processor is a computer specialized for the task of taking information from the computer’s memory and rendering it on the display screen. I/O and communications interfaces are also likely to have their own specialized processors. Thus, in addition to the multitasking performed by the operating system’s kernel, parallel processing is being carried out by these specialized processors.

The use of multiple computers is also essential when the computational task requires more processing than is possible on one computer. Perhaps you have seen pictures of the “server farms” containing tens or hundreds of computers that are used by Internet companies to provide service to millions of customers. In fact, the entire Internet can be considered to be one distributed system working to disseminate information in the form of email and web pages.

Somewhat less familiar than distributed systems are multiprocessors, which are systems designed to bring the computing power of several processors to work in concert on a single computationally-intensive problem. Multiprocessors are extensively used in scientific and engineering simulation, for example, in simulating the atmosphere for weather forecasting and studying climate.

The challenge of concurrent programming

The challenge in concurrent programming comes from the need to synchronize the execution of different processes and to enable them to communicate. If the processes were totally independent, the implementation of concurrency would only require a simple scheduler to allocate resources among them. But if an I/O process accepts a character typed on a keyboard, it must somehow communicate it to the process running the word processor, and if there are multiple windows on a display, processes must somehow synchronize access to the display so that images are sent to the window with the current focus.

It turns out to be extremely difficult to implement safe and efficient synchronization and communication. When your personal computer freezes up or when using one application causes another application to crash, the cause is generally an error in synchronization or communication. Since such problems are time and situation dependent, they are difficult to reproduce, diagnose and correct.

The aim of this book is to introduce you to the constructs, algorithms and systems that are used to obtain correct behavior of concurrent and distributed programs. The choice of construct, algorithm or system depends critically on assumptions concerning the requirements of the software being developed and the architecture of the system that will be used to execute it. This book presents a survey of the main ideas that have been proposed over the years; we hope that it will enable you to analyze, evaluate and employ specific tools that you will encounter in the future.

Transition

We have defined concurrent programming informally, based upon your experience with computer systems. Our goal is to study concurrency abstractly, rather than a particular implementation in a specific programming language or operating system. We have to carefully specify the abstraction that describes the allowable data structures and operations. In the next chapter, we will define the concurrent programming abstraction and justify its relevance. We will also survey languages and systems that can be used to write concurrent programs.

The Concurrent Programming Abstraction

TL;DR

This chapter has presented and justified the concurrent program abstraction as the interleaved execution of atomic statements. The specification of atomic statements is a basic task in the definition of a concurrent system; they can be machine instructions or higher-order statements that are executed atomically. We have also shown how concurrent programs can be written and executed using a concurrency simulator (Pascal and C in BACI), a programming language (Ada and Java), or simulated with a model checker (Promela in Spin). But we have not actually solved any problems in concurrent programming. The next three chapters will focus on the critical section problem, which is the most basic and important problem to be solved in concurrent programming.

The role of abstraction

Scientific descriptions of the world are based on abstractions. A living animal is a system constructed of organs, bones and so on. These organs are composed of cells, which in turn are composed of molecules, which in turn are composed of atoms, which in turn are composed of elementary particles. Scientists find it convenient (and in fact necessary) to limit their investigations to one level, or maybe two levels, and to abstract away from lower levels. Thus your physician will listen to your heart or look into your eyes, but he will not generally think about the molecules from which they are composed. There are other specialists, pharmacologists and biochemists, who study that level of abstraction, in turn abstracting away from the quantum theory that describes the structure and behavior of the molecules.

In computer science, abstractions are just as important. Software engineers generally deal with at most three levels of abstraction:

Systems and libraries: Operating systems and libraries (often called Application Program Interfaces, or APIs) define computational resources that are available to the programmer. You can open a file or send a message by invoking the proper procedure or function call, without knowing how the resource is implemented.

Programming languages A programming language enables you to employ the computational power of a computer, while abstracting away from the details of specific architectures.

Instruction sets Most computer manufacturers design and build families of CPUs which execute the same instruction set as seen by the assembly language programmer or compiler writer. The members of a family may be implemented in totally different ways—emulating some instructions in software or using memory for registers—but a programmer can write a compiler for that instruction set without knowing the details of the implementation.

Of course, the list of abstractions can be continued to include logic gates and their implementation by semiconductors, but software engineers rarely, if ever, need to work at those levels. Certainly, you would never describe the semantics of an assignment statement like \(x\leftarrow y+z\) in terms of the behavior of the electrons within the chip implementing the instruction set into which the statement was compiled.

Two of the most important tools for software abstraction are encapsulation and concurrency.

Encapsulation achieves abstraction by dividing a software module into a public specification and a hidden implementation. The specification describes the available operations on a data structure or real-world model. The detailed implementation of the structure or model is written within a separate module that is not accessible from the outside. Thus changes in the internal data representation and algorithm can be made without affecting the programming of the rest of the system. Modern programming languages directly support encapsulation.

Concurrency is an abstraction that is designed to make it possible to reason about the dynamic behavior of programs. This abstraction will be carefully explained in the rest of this chapter. First we will define the abstraction and then show how to relate it to various computer architectures. For readers who are familiar with machine-language programming, Sections 2.8—2.9 relate the abstraction to machine instructions; the conclusion is that there are no important concepts of concurrency that cannot be explained at the higher level of abstraction, so these sections can be skipped if desired. The chapter concludes with an introduction to concurrent programming in various languages and a supplemental section on a puzzle that may help you understand the concept of state and state diagram.

Concurrent execution as interleaving of atomic statements

We now define the concurrent programming abstraction that we will study in this textbook. The abstraction is based upon the concept of a (sequential) process, which we will not formally define. Consider it as a normal program fragment written in a programming language. You will not be misled if you think of a process as a fancy name for a procedure or method in an ordinary programming language.

Definition 2.1 A concurrent program consists of a finite set of (sequential) processes. The processes are written using a finite set of atomic statements. The execution of a concurrent program proceeds by executing a sequence of the atomic statements obtained by arbitrarily interleaving the atomic statements from the processes. A computation is an execution sequence that can occur as a result of the interleaving. Computations are also called scenarios.

Definition 2.2 During a computation the control pointer of a process indicates the next statement that can be executed by that process. Each process has its own control pointer.

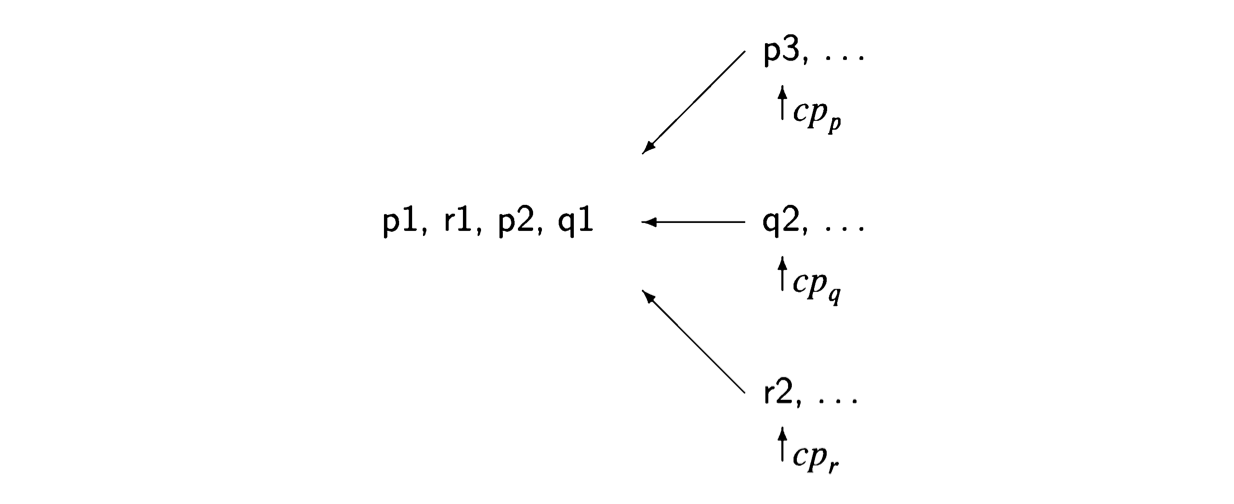

Computations are created by interleaving, which merges several statement streams. At each step during the execution of the concurrent program, the next statement to be executed will be chosen from the statements pointed to by the control pointers cp of the processes.

Suppose that we have two processes, \(p\) composed of statements \(p1\) followed by \(p2\) and \(q\) composed of statements \(q1\) followed by \(q2\), and that the execution is started with the control pointers of the two processes pointing to \(p1\) and \(q1\). Assuming that the statements are assignment statements that do not transfer control, the possible scenarios are:

\[ p1 \rightarrow q1 \rightarrow p2 \rightarrow q2, \]
\[ p1 \rightarrow q1 \rightarrow q2 \rightarrow p2, \]
\[ p1 \rightarrow p2 \rightarrow q1 \rightarrow q2, \]
\[ q1 \rightarrow p1 \rightarrow q2 \rightarrow p2, \]
\[ q1 \rightarrow p1 \rightarrow p2 \rightarrow q2, \]
\[ q1 \rightarrow q2 \rightarrow p1 \rightarrow p2. \]

Note that \(p2 \rightarrow p1 \rightarrow q1 \rightarrow q2 \) is not a scenario, because we respect the sequential execution of each individual process, so that \(p2\) cannot be executed before \(p1\).
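The six scenarios listed above can be generated mechanically. The following sketch enumerates every interleaving of two statement sequences while preserving the order within each process; the function name `interleavings` is chosen here for illustration only.

```python
def interleavings(p, q):
    """Return all merges of sequences p and q that keep each one's internal order."""
    if not p:
        return [list(q)]
    if not q:
        return [list(p)]
    # The next statement comes either from p or from q.
    first_from_p = [[p[0]] + rest for rest in interleavings(p[1:], q)]
    first_from_q = [[q[0]] + rest for rest in interleavings(p, q[1:])]
    return first_from_p + first_from_q


scenarios = interleavings(["p1", "p2"], ["q1", "q2"])
for s in scenarios:
    print(" -> ".join(s))
```

Because the recursion only ever takes the first remaining statement of a process, a sequence such as p2, p1, q1, q2 can never be produced. The number of scenarios grows very quickly with the length of the processes, which is one reason informal reasoning about concurrent programs is hard.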

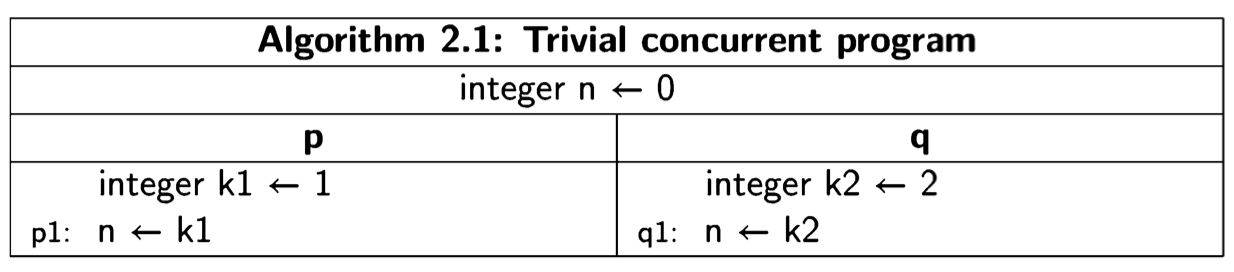

We will present concurrent programs in a language-independent form, because the concepts are universal, whether they are implemented as operating system calls, directly in programming languages like Ada or Java, or in a model specification language like Promela. The notation is demonstrated by the following trivial two-process concurrent algorithm:

The program is given a title, followed by declarations of global variables, followed by two columns, one for each of the two processes, which by convention are named process p and process q. Each process may have declarations of local variables, followed by the statements of the process. We use the following convention:

States

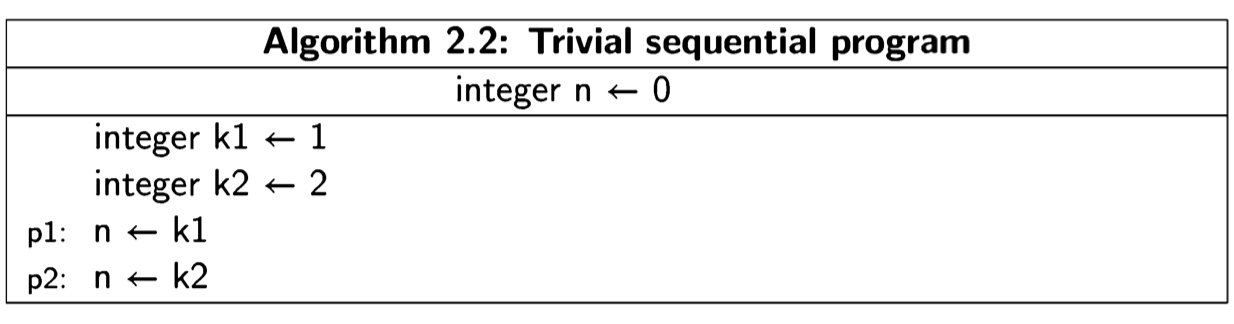

The execution of a concurrent program is defined by states and transitions between states. Let us first look at these concepts in a sequential version of the above algorithm:

At any time during the execution of this program, it must be in a state defined by the value of the control pointer and the values of the three variables. Executing a statement corresponds to making a transition from one state to another. It is clear that this program can be in one of three states: an initial state and two other states obtained by executing the two statements. This is shown in the following diagram, where a node represents a state, arrows represent the transitions, and the initial state is pointed to by the short arrow on the left:

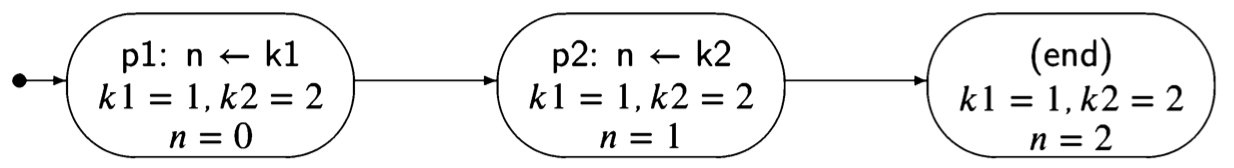

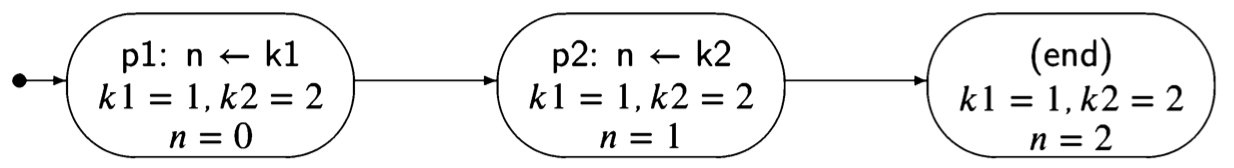

Consider now the trivial concurrent program Algorithm 2.1. There are two processes, so the state must include the control pointers of both processes. Furthermore, in the initial state there is a choice as to which statement to execute, so there are two transitions from the initial state.

The lefthand states correspond to executing p1 followed by q1, while the righthand states correspond to executing q1 followed by p1. Note that the computation can terminate in two different states (with different values of n), depending on the interleaving of the statements.

Definition 2.3 The state of a (concurrent) algorithm is a tuple consisting of one element for each process that is a label from that process, and one element for each global or local variable that is a value whose type is the same as the type of the variable.

The number of possible states—the number of tuples—is quite large, but in an execution of a program, not all possible states can occur. For example, since no values are assigned to the variables k1 and k2 in Algorithm 2.1, no state can occur in which these variables have values that are different from their initial values.

Definition 2.4 Let \(s_1\) and \(s_2\) be states. There is a transition between \(s_1\) and \(s_2\) if executing a statement in state \(s_1\) changes the state to \(s_2\). The statement executed must be one of those pointed to by a control pointer in \(s_1\).

Definition 2.5 A state diagram is a graph defined inductively. The initial state diagram contains a single node labeled with the initial state. If state \(s_1\) labels a node in the state diagram, and if there is a transition from \(s_1\) to \(s_2\), then there is a node labeled \(s_2\) in the state diagram and a directed edge from \(s_1\) to \(s_2\).

For each state, there is only one node labeled with that state.

The set of reachable states is the set of states in a state diagram.

It follows from the definitions that a computation (scenario) of a concurrent program is represented by a directed path through the state diagram starting from the initial state, and that all computations can be so represented. Cycles in the state diagram represent the possibility of infinite computations in a finite graph.

The state diagram for Algorithm 2.1 shows that there are two different scenarios, each of which contains three of the five reachable states.
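Definitions 2.3 to 2.5 translate directly into a small search procedure. The sketch below builds the state diagram inductively from the initial state. The listing of Algorithm 2.1 is not reproduced in this text, so the program used here is an assumption: a global variable n initialized to 0, process p executing the single statement p1: n ← k1 with local k1 = 1, and process q executing q1: n ← k2 with local k2 = 2. The names `successors`, `state_diagram` and `scenarios` are illustrative.

```python
from collections import deque

# A state is the tuple (cp of p, cp of q, n, k1, k2), as in Definition 2.3.
# ASSUMED program: p1: n <- k1 and q1: n <- k2, with n = 0, k1 = 1, k2 = 2.
INITIAL = ("p1", "q1", 0, 1, 2)


def successors(state):
    """States reachable by executing one statement pointed to by a control pointer."""
    cp_p, cp_q, n, k1, k2 = state
    result = []
    if cp_p == "p1":                      # execute p1: n <- k1
        result.append(("end", cp_q, k1, k1, k2))
    if cp_q == "q1":                      # execute q1: n <- k2
        result.append((cp_p, "end", k2, k1, k2))
    return result


def state_diagram(initial):
    """Build the diagram inductively: one node per reachable state (Definition 2.5)."""
    edges = {}
    frontier = deque([initial])
    while frontier:
        s = frontier.popleft()
        if s in edges:                    # each state labels only one node
            continue
        edges[s] = successors(s)
        frontier.extend(edges[s])
    return edges


def scenarios(edges, state):
    """All paths from state to a terminal state (valid only for acyclic diagrams)."""
    if not edges[state]:
        return [[state]]
    return [[state] + path for nxt in edges[state] for path in scenarios(edges, nxt)]


diagram = state_diagram(INITIAL)
paths = scenarios(diagram, INITIAL)
print(len(diagram), "reachable states,", len(paths), "scenarios")
```

Under the assumed program, the search finds the same structure described in the text: a handful of reachable states out of the much larger set of possible tuples, and one path per interleaving. For programs with loops the path enumeration would not terminate, which is why the diagram itself, rather than the set of paths, is the finite object of study.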

Scenarios

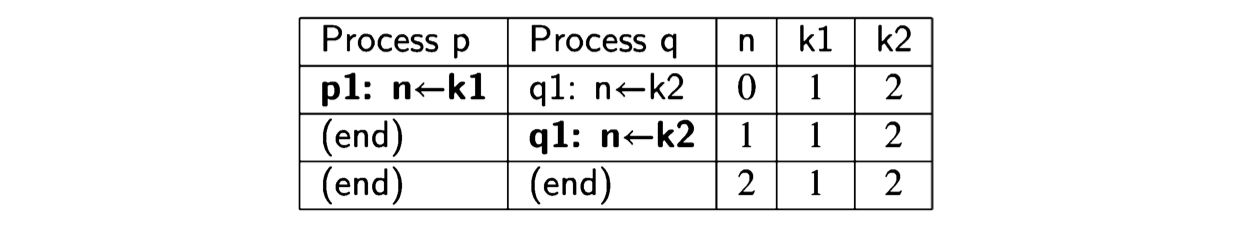

A scenario is defined by a sequence of states. Since diagrams can be hard to draw, especially for large programs, it is convenient to use a tabular representation of scenarios. This is done simply by listing the sequence of states in a table; the columns for the control pointers are labeled with the processes and the columns for the variable values with the variable names. The following table shows the scenario of Algorithm 2.1 corresponding to the lefthand path:

In a state, there may be more than one statement that can be executed. We use bold font to denote the statement that was executed to get to the state in the following row.

Rows represent states. If the statement executed is an assignment statement, the new value that is assigned to the variable is a component of the next state in the scenario, which is found in the next row.

Justification of the abstraction

Clearly, it doesn’t make sense to talk of the global state of a computer system, or of coordination between computers at the level of individual instructions. The electrical signals in a computer travel at the speed of light, about \( 2 \cdot 10^8 \) m/sec, and the clock cycles of modern CPUs are at least one gigahertz, so information cannot travel more than \( 2 \cdot 10^8 \cdot 10^{-9} = 0.2 \) m during a clock cycle of a CPU. There is simply not enough time to coordinate individual instructions of more than one CPU.

Nevertheless, that is precisely the abstraction that we will use! We will assume that we have a bird’s-eye view of the global state of the system, and that a statement of one process executes by itself and to completion, before the execution of a statement of another process commences.

It is a convenient fiction to regard the execution of a concurrent program as being carried out by a global entity who at each step selects the process from which the next statement will be executed. The term interleaving comes from this image: just as you might interleave the cards from several decks of playing cards by selecting cards one by one from the decks, so we regard this entity as interleaving statements by selecting them one by one from the processes. The interleaving is arbitrary, that is—with one exception to be discussed in Section 2.7—we do not restrict the choice of the process from which the next statement is taken.

The abstraction defined is highly artificial, so we will spend some time justifying it for various possible computer architectures.

Multitasking systems

Consider the case of a concurrent program that is being executed by multitasking, that is, by sharing the resources of one computer. Obviously, with a single CPU there is no question of the simultaneous execution of several instructions. The selection of the next instruction to execute is carried out by the CPU and the operating system. Normally, the next instruction is taken from the same process from which the current instruction was executed; occasionally, interrupts from I/O devices or internal timers will cause the execution to be interrupted. A new process called an interrupt handler will be executed, and upon its completion, an operating system function called the scheduler may be invoked to select a new process to execute.

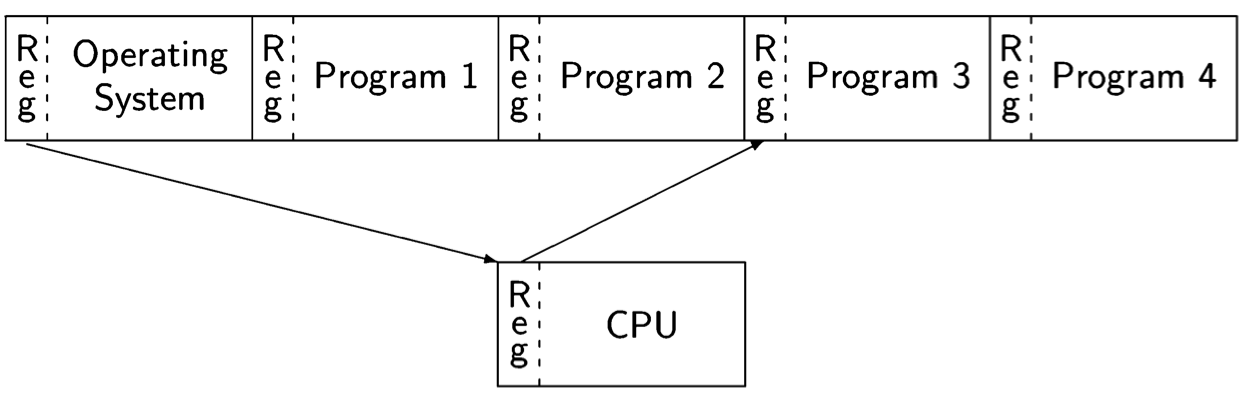

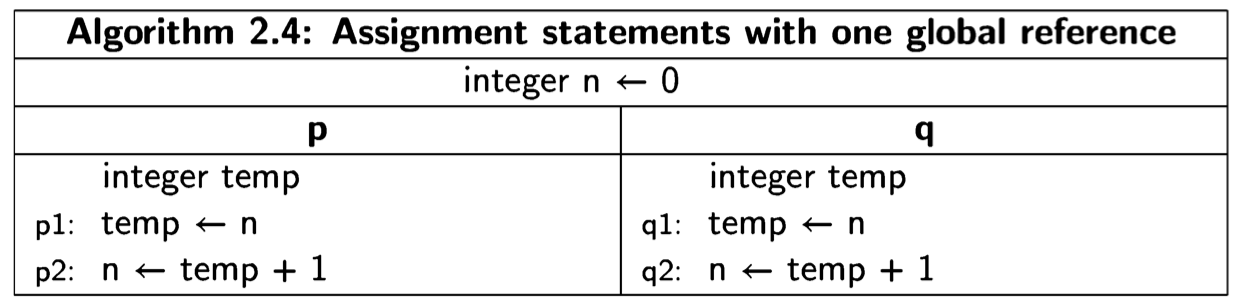

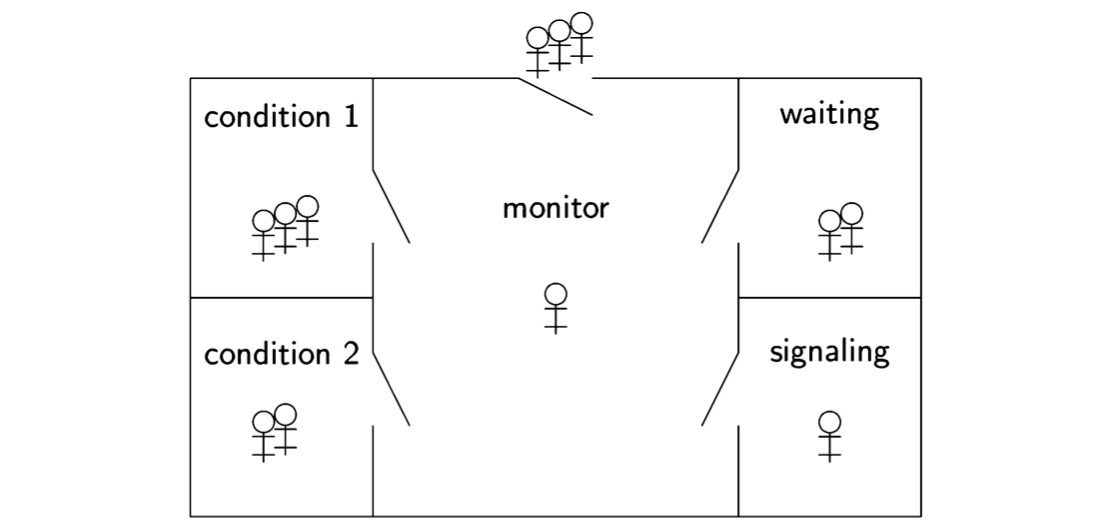

This mechanism is called a context switch. The diagram below shows the memory divided into five segments, one for the operating system code and data, and four for the code and data of the programs that are running concurrently:

When the execution is interrupted, the registers in the CPU (not only the registers used for computation, but also the control pointer and other registers that point to the memory segment used by the program) are saved into a prespecified area in the program’s memory. Then the register contents required to execute the interrupt handler are loaded into the CPU. At the conclusion of the interrupt processing, the symmetric context switch is performed, storing the interrupt handler registers and loading the registers for the program. The end of interrupt processing is a convenient time to invoke the operating system scheduler, which may decide to perform the context switch with another program, not the one that was interrupted.

In a multitasking system, the non-intuitive aspect of the abstraction is not the interleaving of atomic statements (that actually occurs), but the requirement that any arbitrary interleaving is acceptable. After all, the operating system scheduler may only be called every few milliseconds, so many thousands of instructions will be executed from each process before any instructions are interleaved from another. We defer a discussion of this important point to Section 2.4.

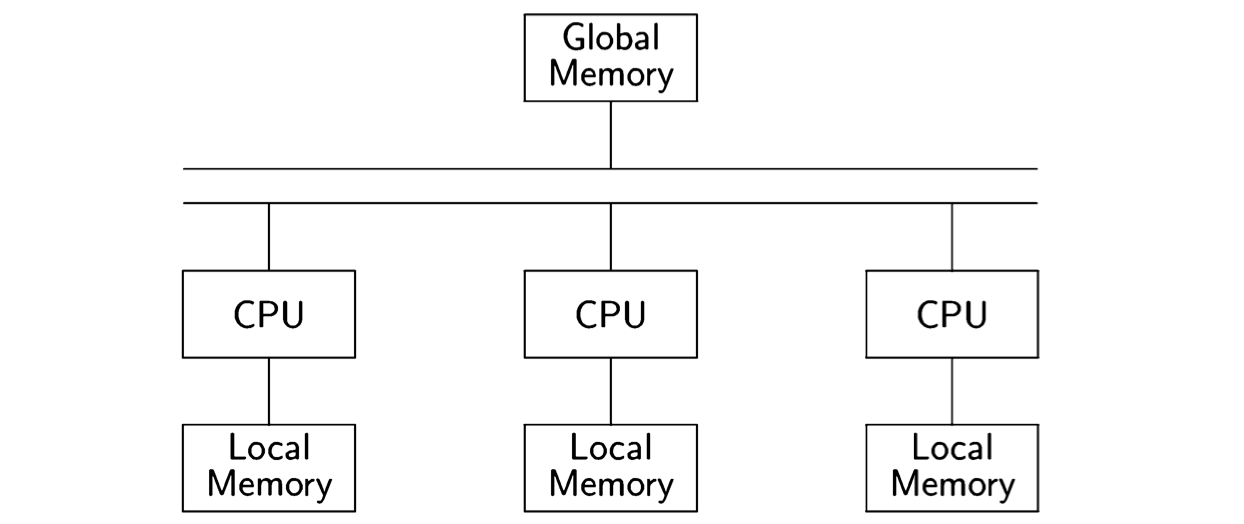

Multiprocessor computers

A multiprocessor computer is a computer with more than one CPU. The memory is physically divided into banks of local memory, each of which can be accessed only by one CPU, and global memory, which can be accessed by all CPUs:

If we have a sufficient number of CPUs, we can assign each process to its own CPU. The interleaving assumption no longer corresponds to reality, since each CPU is executing its instructions independently. Nevertheless, the abstraction is useful here.

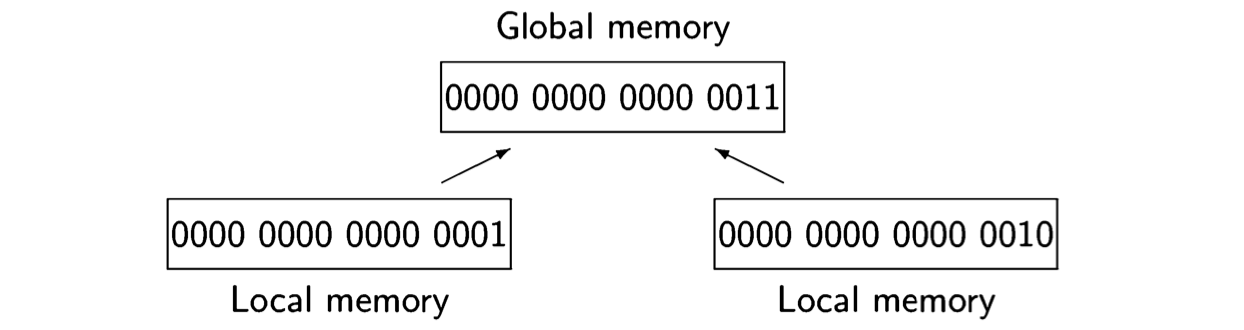

As long as there is no contention, that is, as long as two CPUs do not attempt to access the same resource (in this case, the global memory), the computations defined by interleaving will be indistinguishable from those of truly parallel execution. With contention, however, there is a potential problem. The memory of a computer is divided into a large number of cells that store data which is read and written by the CPU. Eight-bit cells are called bytes and larger cells are called words, but the size of a cell is not important for our purposes. We want to ask what might happen if two processors try to read or write a cell simultaneously so that the operations overlap. The following diagram indicates the problem:

It shows 16-bit cells of local memory associated with two processors; one cell contains the value 0 ... 01 = 1 and one contains 0 ... 10 = 2. If both processors write to the cell of global memory at the same time, the value might be undefined; for example, it might be the value 0 ... 11 = 3 obtained by or’ing together the bit representations of 1 and 2.

In practice, this problem does not occur because memory hardware is designed so that (for some size memory cell) one access completes before the other commences. Therefore, we can assume that if two CPUs attempt to read or write the same cell in global memory, the result is the same as if the two instructions were executed in either order. In effect, atomicity and interleaving are performed by the hardware.
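The difference between the two situations can be made concrete with a toy model. The sketch below is not a description of real memory hardware: `overlapped_write` simply models the hypothetical failure described above, in which the bits of two simultaneous writes are or'ed together, while `atomic_writes` models hardware that completes one access before the other commences.

```python
def overlapped_write(a: int, b: int) -> int:
    """Hypothetical non-atomic hardware: the bits of both writes are or'ed together."""
    return a | b


def atomic_writes(a: int, b: int) -> set:
    """Atomic hardware: the writes happen in one of two orders; the last one wins."""
    outcomes = set()
    for order in ((a, b), (b, a)):
        cell = 0
        for value in order:
            cell = value          # each write completes before the next begins
        outcomes.add(cell)
    return outcomes


print(overlapped_write(1, 2))     # a value that neither processor wrote
print(atomic_writes(1, 2))        # only values that some processor wrote
```

With atomic access the final value is not determined, but it is always one of the values actually written, which is exactly what the interleaving abstraction predicts.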

Other less restrictive abstractions have been studied; we will give one example of an algorithm that works under the assumption that if a read of a memory cell overlaps a write of the same cell, the read may return an arbitrary value (Section 5.3).

The requirement to allow arbitrary interleaving makes a lot of sense in the case of a multiprocessor; because there is no central scheduler, any computation resulting from interleaving may certainly occur.

Distributed systems

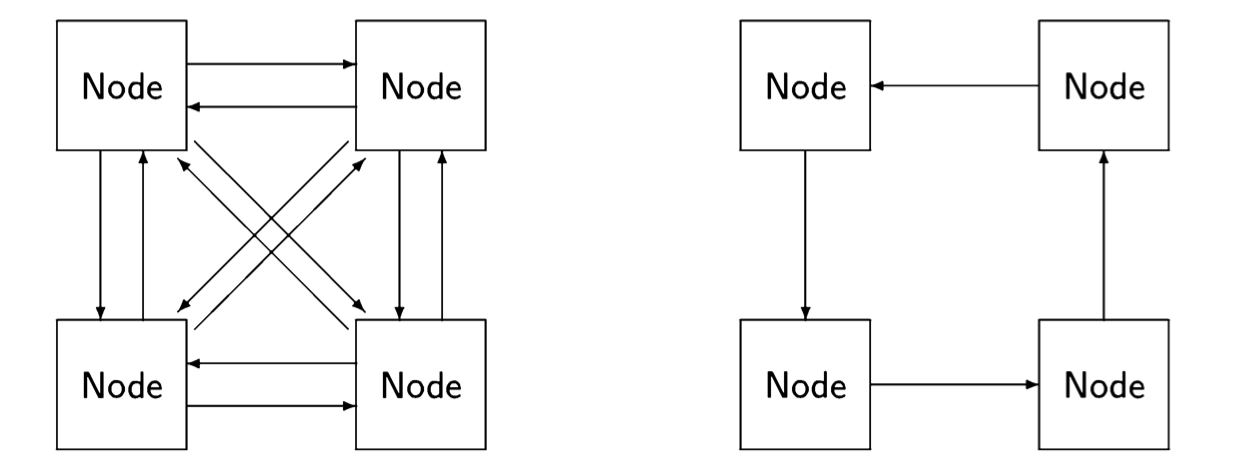

A distributed system is composed of several computers that have no global resources; instead, they are connected by communications channels enabling them to send messages to each other. The language of graph theory is used in discussing distributed systems; each computer is a node and the nodes are connected by (directed) edges. The following diagram shows two possible schemes for interconnecting nodes: on the left, the nodes are fully connected while on the right they are connected in a ring:

In a distributed system, the abstraction of interleaving is, of course, totally false, since it is impossible to coordinate each node in a geographically distributed system. Nevertheless, interleaving is a very useful fiction, because as far as each node is concerned, it only sees discrete events: it is either executing one of its own statements, sending a message or receiving a message. Any interleaving of all the events of all the nodes can be used for reasoning about the system, as long as the interleaving is consistent with the statement sequences of each individual node and with the requirement that a message be sent before it is received.

Distributed systems are considered to be distinct from concurrent systems. In a concurrent system implemented by multitasking or multiprocessing, the global memory is accessible to all processes and each one can access the memory efficiently. In a distributed system, the nodes may be geographically distant from each other, so we cannot assume that each node can send a message directly to all other nodes. In other words, we have to consider the topology or connectedness of the system, and the quality of an algorithm (its simplicity or efficiency) may be dependent on a specific topology. A fully connected topology is extremely efficient in that any node can send a message directly to any other node, but it is extremely expensive, because for n nodes, we need \( n \cdot (n-1) \approx n^2 \) communications channels. The ring topology has minimal cost in that any node has only one communications line associated with it, but it is inefficient, because to send a message from one arbitrary node to another we may need to have it relayed through up to \( n - 2 \) other nodes.
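The cost and efficiency figures for the two topologies can be computed for small cases. The sketch below assumes a unidirectional ring in which node i can send only to node (i + 1) mod n, which is consistent with the directed edges and the bound of \( n - 2 \) relays mentioned above; the function names are illustrative.

```python
def fully_connected_channels(n: int) -> int:
    """Directed channels needed so that every node reaches every other directly."""
    return n * (n - 1)


def ring_relays(n: int, src: int, dst: int) -> int:
    """Intermediate nodes a message passes through in a unidirectional ring.

    ASSUMPTION: node i can send only to node (i + 1) % n.
    """
    hops = (dst - src) % n            # channels traversed from src to dst
    return max(hops - 1, 0)           # nodes strictly between src and dst


def ring_max_relays(n: int) -> int:
    """Worst case over all destinations, for a ring of n >= 2 nodes."""
    return max(ring_relays(n, 0, dst) for dst in range(1, n))


for n in (5, 10, 100):
    print(n, fully_connected_channels(n), ring_max_relays(n))
```

The output shows the trade-off directly: the number of channels in the fully connected topology grows quadratically, while the worst-case number of relays in the ring grows only linearly but is never zero for distant nodes.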

A further difference between concurrent and distributed systems is that the behavior of systems in the presence of faults is usually studied within distributed systems. In a multitasking system, hardware failure is usually catastrophic since it affects all processes, while a software failure may be relatively innocuous (if the process simply stops working), though it can be catastrophic (if it gets stuck in an infinite loop at high priority). In a distributed system, while failures can be catastrophic for single nodes, it is usually possible to diagnose and work around a faulty node, because messages may be relayed through alternate communication paths. In fact, the success of the Internet can be attributed to the robustness of its protocols when individual nodes or communications channels fail.

Arbitrary interleaving

We have to justify the use of arbitrary interleavings in the abstraction. What this means, in effect, is that we ignore time in our analysis of concurrent programs. For example, the hardware of our system may be such that an interrupt can occur only once every millisecond. Therefore, we are tempted to assume that several thousand statements are executed from a single process before any statements are executed from another. Instead, we are going to assume that after the execution of any statement, the next statement may come from any process. What is the justification for this abstraction?

The abstraction of arbitrary interleaving makes concurrent programs amenable to formal analysis, and as we shall see, formal analysis is necessary to ensure the correctness of concurrent programs. Arbitrary interleaving ensures that we only have to deal with finite or countable sequences of statements \( a_1, a_2, a_3, \dots \), and need not analyze the actual time intervals between the statements. The only relation between the statements is that \(a_i\) precedes or follows (or immediately precedes or follows) \(a_j\). Remember that we did not specify what the atomic statements are, so you can choose the atomic statements to be as coarse-grained or as fine-grained as you wish. You can initially write an algorithm and prove its correctness under the assumption that each function call is atomic, and then refine the algorithm to assume only that each statement is atomic.

The second reason for using the arbitrary interleaving abstraction is that it enables us to build systems that are robust to modification of their hardware and software. Systems are always being upgraded with faster components and faster algorithms. If the correctness of a concurrent program depended on assumptions about time of execution, every modification to the hardware or software would require that the system be rechecked for correctness (see [62] for an example). For example, suppose that an operating system had been proved correct under the assumption that characters are being typed in at no more than 10 characters per terminal per second. That is a conservative assumption for a human typist, but it would become invalidated if the input were changed to come from a communications channel.

The third reason is that it is difficult, if not impossible, to precisely repeat the execution of a concurrent program. This is certainly true in the case of systems that accept input from humans, but even in a fully automated system, there will always be some jitter, that is, some unevenness in the timing of events. A concurrent program cannot be debugged in the familiar sense of diagnosing a problem, correcting the source code, recompiling and rerunning the program to check if the bug still exists. Rerunning the program may just cause it to execute a different scenario than the one where the bug occurred. The solution is to develop programming and verification techniques that ensure that a program is correct under all interleavings.

Atomic statements

The concurrent programming abstraction has been defined in terms of the interleaving of atomic statements. What this means is that an atomic statement is executed to completion without the possibility of interleaving statements from another process. An important property of atomic statements is that if two are executed simultaneously, the result is the same as if they had been executed sequentially (in either order). The inconsistent memory store shown on page 15 will not occur.

It is important to specify the atomic statements precisely, because the correctness of an algorithm depends on this specification. We start with a demonstration of the effect of atomicity on correctness, and then present the specification used in this book.

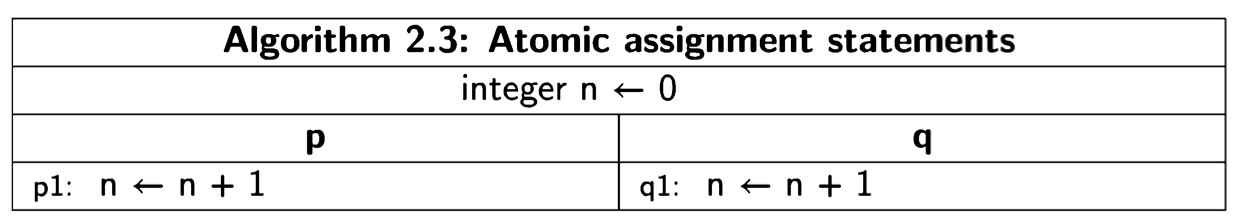

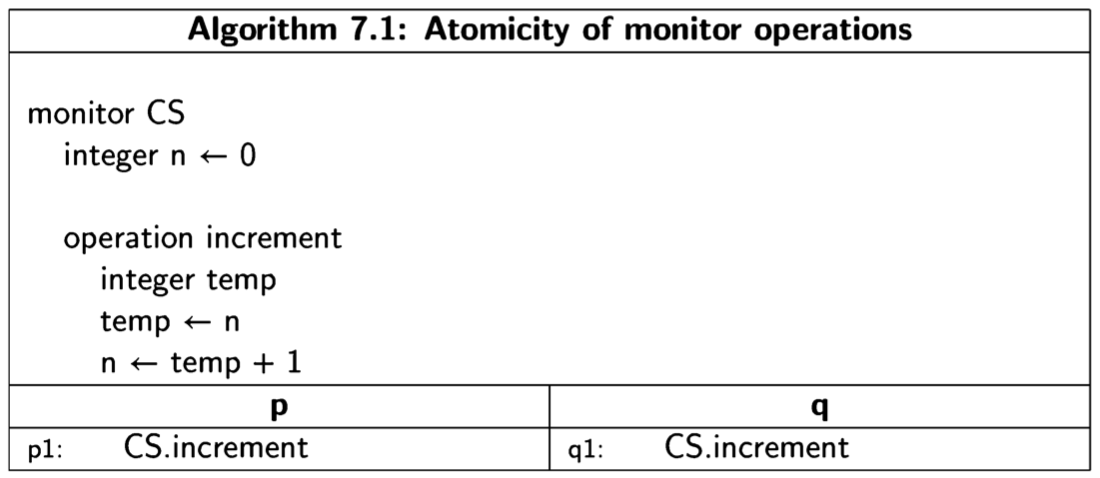

Recall that in our algorithms, each labeled line represents an atomic statement. Consider the following trivial algorithm:

There are two possible scenarios:

In both scenarios, the final value of the global variable n is 2, and the algorithm is a correct concurrent algorithm with respect to the postcondition \(n = 2\).

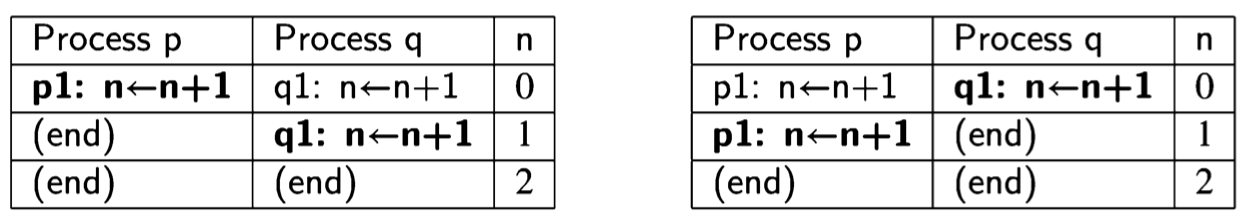

Now consider a modification of the algorithm, in which each atomic statement references the global variable n at most once:

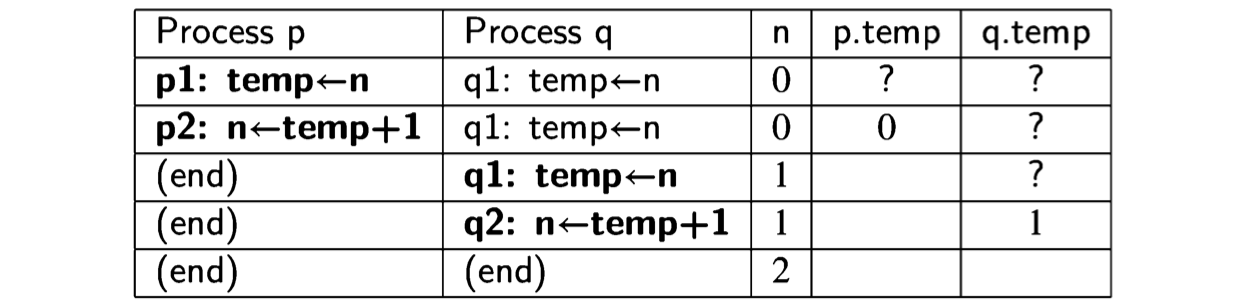

There are scenarios of the algorithm that are also correct with respect to the postcondition \(n = 2\):

As long as p1 and p2 are executed immediately one after the other, and similarly for q1 and q2, the result will be the same as before, because we are simulating the execution of \(n\leftarrow n+1\) with two statements. However, other scenarios are possible in which the statements from the two processes are interleaved:

Clearly, Algorithm 2.4 is not correct with respect to the postcondition \(n = 2\).
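Because the algorithm is so small, all of its scenarios can be enumerated mechanically. The following Java sketch assumes the two-statement form described in the text, in which each process first copies n into a local temp and then stores temp + 1 back into n; the class and method names are illustrative only.

```java
import java.util.Set;
import java.util.TreeSet;

// Sketch: explore every interleaving of two processes that each execute
//   1: temp <- n
//   2: n <- temp + 1
// and collect the possible final values of n.
class Interleavings {

    // pcP and pcQ count how many statements each process has already executed.
    static void explore(int n, int pcP, int tempP, int pcQ, int tempQ, Set<Integer> finals) {
        if (pcP == 2 && pcQ == 2) {          // both processes have terminated
            finals.add(n);
            return;
        }
        // Choose the next statement from process p ...
        if (pcP == 0) {
            explore(n, 1, n, pcQ, tempQ, finals);              // p1: temp <- n
        } else if (pcP == 1) {
            explore(tempP + 1, 2, tempP, pcQ, tempQ, finals);  // p2: n <- temp + 1
        }
        // ... or from process q.
        if (pcQ == 0) {
            explore(n, pcP, tempP, 1, n, finals);              // q1: temp <- n
        } else if (pcQ == 1) {
            explore(tempQ + 1, pcP, tempP, 2, tempQ, finals);  // q2: n <- temp + 1
        }
    }

    static Set<Integer> finalValues() {
        Set<Integer> finals = new TreeSet<>();
        explore(0, 0, 0, 0, 0, finals);      // n is initially 0
        return finals;
    }

    public static void main(String[] args) {
        System.out.println(finalValues());   // prints [1, 2]
    }
}
```

The value 1 arises whenever both processes read n before either has written it, which is exactly the kind of interleaved scenario discussed above.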

We learn from this simple example that the correctness of a concurrent program is relative to the specification of the atomic statements. The convention in the book is that:

| Assignment statements are atomic statements, as are evaluations of boolean conditions in control statements. |
|---|

This assumption is not at all realistic, because computers do not execute source code assignment and control statements; rather, they execute machine-code instructions that are defined at a much lower level. Nevertheless, by the simple expedient of defining local variables as we did above, we can use this simple model to demonstrate the same behaviors of concurrent programs that occur when machine-code instructions are interleaved. The source code programs might look artificial, but the convention spares us the necessity of using machine code during the study of concurrency. For completeness, Section 2.8 provides more detail on the interleaving of machine-code instructions.

Correctness

In sequential programs, rerunning a program with the same input will always give the same result, so it makes sense to debug a program: run and rerun the program with breakpoints until a problem is diagnosed; fix the source code; rerun to check if the output is now correct. In a concurrent program, some scenarios may give the correct output while others do not. You cannot debug a concurrent program in the normal way, because each time you run the program, you will likely get a different scenario. The fact that you obtain the correct answer may just be a fluke of the particular scenario and not the result of fixing a bug.

In concurrent programming, we are interested in problems, like the problem with Algorithm 2.4, that occur as a result of interleaving. Of course, concurrent programs can have ordinary bugs like incorrect bounds of loops or indices of arrays, but these present no difficulties that were not already present in sequential programming. The computations in examples are typically trivial, such as incrementing a single variable, and in many cases, we will not even specify the actual computations that are done and simply abstract away their details, leaving just the name of a procedure, such as critical section. Do not let this fool you into thinking that concurrent programs are toy programs; we will use these simple programs to develop algorithms and techniques that can be added to otherwise correct programs (of any complexity) to ensure that correctness properties are fulfilled in the presence of interleaving.

For sequential programs, the concept of correctness is so familiar that the formal definition is often neglected. (For reference, the definition is given in Appendix B.) Correctness of (non-terminating) concurrent programs is defined in terms of properties of computations, rather than in terms of computing a functional result. There are two types of correctness properties:

Safety properties The property must always be true.

Liveness properties The property must eventually become true.

More precisely, for a safety property P to hold, it must be true that in every state of every computation, P is true. For example, we might require as a safety property of the user interface of an operating system: Always, a mouse cursor is displayed. If we can prove this property, we can be assured that no customer will ever complain that the mouse cursor disappears, no matter what programs are running on the system.

For a liveness property P to hold, it must be true that in every computation there is some state in which P is true. For example, a liveness property of an operating system might be: If you click on a mouse button, eventually the mouse cursor will change shape. This specification allows the system not to respond immediately to the click, but it does ensure that the click will not be ignored indefinitely.

It is very easy to write a program that will satisfy a safety property. For example, the following program for an operating system satisfies the safety property Always, a mouse cursor is displayed:

    while true
        display the mouse cursor

I seriously doubt if you would find users for an operating system whose only feature is to display a mouse cursor. Furthermore, safety properties often take the form of Always, something “bad” is not true, and this property is trivially satisfied by an empty program that does nothing at all. The challenge is to write concurrent programs that do useful things—thus satisfying the liveness properties—without violating the safety properties.

Safety and liveness properties are duals of each other. This means that the negation of a safety property is a liveness property and vice versa. Suppose that we want to prove the safety property Always, a mouse cursor is displayed. The negation of this property is a liveness property: Eventually, no mouse cursor will be displayed. The safety property will be true if and only if the liveness property is false. Similarly, the negation of the liveness property If you click on a mouse button, eventually the cursor will change shape, can be expressed as Once a button has been clicked, always, the cursor will not change its shape. The liveness property is true if this safety property is false. One of the forms will be more natural to use in a specification, though the dual form may be easier to prove.
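The duality can be illustrated on a single recorded scenario. The Java sketch below is only an approximation of the definitions: it works on a finite list of states, whereas the properties in the text are defined over all (possibly infinite) computations. The names always, eventually and dualityHolds are illustrative.

```java
import java.util.List;
import java.util.function.Predicate;

// Sketch: "always P" and "eventually P" evaluated over one finite trace of states.
class TraceProperties {

    // Safety-style check: P holds in every state of the trace.
    static <S> boolean always(List<S> trace, Predicate<S> p) {
        return trace.stream().allMatch(p);
    }

    // Liveness-style check: P holds in at least one state of the trace.
    static <S> boolean eventually(List<S> trace, Predicate<S> p) {
        return trace.stream().anyMatch(p);
    }

    // "Always displayed" is true exactly when "eventually not displayed" is false.
    static boolean dualityHolds(List<Boolean> cursorDisplayed) {
        Predicate<Boolean> displayed = v -> v;
        return always(cursorDisplayed, displayed)
                == !eventually(cursorDisplayed, displayed.negate());
    }

    public static void main(String[] args) {
        // Each element records whether the cursor was displayed in that state.
        List<Boolean> trace = List.of(true, true, false, true);
        Predicate<Boolean> displayed = v -> v;
        System.out.println("always displayed:         " + always(trace, displayed));
        System.out.println("eventually not displayed: " + eventually(trace, displayed.negate()));
        System.out.println("duality holds:            " + dualityHolds(trace));
    }
}
```

Checking one trace says nothing about the other scenarios of a program, which is why the text relies on proof rather than on observation.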

Because correctness of concurrent programs is defined on all scenarios, it is impossible to demonstrate the correctness of a program by testing it. Formal methods have been developed to verify concurrent programs, and these are extensively used in critical systems.

Linear and branching temporal logics

The formalism we use in this book for expressing correctness properties and verifying programs is called linear temporal logic (LTL). LTL expresses properties that must be true at a state in a single (but arbitrary) scenario. Branching temporal logic is an alternate formalism; in these logics, you can express that for a property to be true at a state, it must be true in some or all scenarios starting from the state. CTL [24] is a branching temporal logic that is widely used, especially in the verification of computer hardware. Model checkers for CTL are SMV and NuSMV. LTL is used in this book both because it is simpler and because it is more appropriate for reasoning about software algorithms.

Fairness

There is one exception to the requirement that any arbitrary interleaving is a valid execution of a concurrent program. Recall that the concurrent programming abstraction is intended to represent a collection of independent computers whose instructions are interleaved. While we clearly stated that we did not wish to assume anything about the absolute speeds at which the various processors are executing, it does not make sense to assume that statements from any specific process are never selected in the interleaving.

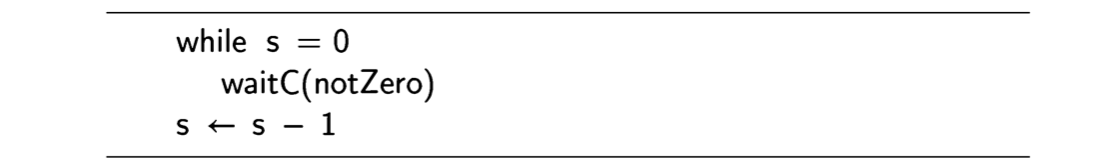

Definition 2.6 A scenario is (weakly) fair if at any state in the scenario, a statement that is continually enabled eventually appears in the scenario.

If after constructing a scenario up to the ith state \( s_0, s_1, \dots, s_i\), the control pointer of a process p points to a statement \(p_j\) that is continually enabled, then \(p_j\) will appear in the scenario as \( s_k \), for \( k > i \). Assignment and control statements are continually enabled. Later we will encounter statements that may be disabled, as well as other (stronger) forms of fairness.

Consider the following algorithm:

Let us ask the question: does this algorithm necessarily halt? That is, does the algorithm halt for all scenarios? Clearly, the answer is no, because one scenario is \( p_1, p_2, p_1, p_2 \dots\), in which p1 and then p2 are always chosen, and q1 is never chosen. Of course this is not what was intended. Process q is continually ready to run because there is no impediment to executing the assignment to flag, so the non-terminating scenario is not fair. If we allow only fair scenarios, then eventually an execution of q1 must be included in every scenario. This causes process q to terminate immediately, and process p to terminate after executing at most two more statements. We will say that under the assumption of weak fairness, the algorithm is correct with respect to the claim that it always terminates.
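A Java rendering of this algorithm makes the role of fairness tangible. The listing itself is not reproduced in this text, so the sketch below is reconstructed from the description (process p loops while flag is false, process q assigns true to flag); the loop body n = 1 - n, the class name and the timeout are assumptions. The variable flag is declared volatile so that p re-reads it on every iteration.

```java
// Sketch: process p loops until process q sets the flag.
// Termination relies on the scheduler eventually running q (fairness).
class StopLoop {
    static volatile boolean flag = false;
    static int n = 0;                       // used only by process p

    // Returns true if both threads terminated within the timeout.
    static boolean runOnce(long timeoutMillis) {
        flag = false;
        n = 0;
        Thread p = new Thread(() -> {
            while (!flag) {                 // p1: while flag = false
                n = 1 - n;                  // p2: (assumed loop body)
            }
        });
        Thread q = new Thread(() -> flag = true);   // q1: flag <- true
        p.setDaemon(true);                  // do not keep the JVM alive if p never stops
        p.start();
        q.start();
        try {
            p.join(timeoutMillis);
            q.join(timeoutMillis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
        return !p.isAlive() && !q.isAlive();
    }

    public static void main(String[] args) {
        System.out.println("terminated: " + runOnce(5000));
    }
}
```

Real thread schedulers are fair enough in practice that this program halts, but nothing in the source text of the algorithm forces that outcome; it is the fairness assumption that rules out the scenario in which q1 is never chosen.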

Machine-code instructions

Algorithm 2.4 may seem artificial, but it is a faithful representation of the actual implementation of computer programs. Programs written in a programming language like Ada or Java are compiled into machine code. In some cases, the code is for a specific processor, while in other cases, the code is for a virtual machine like the Java Virtual Machine (JVM). Code for a virtual machine is then interpreted or a further compilation step is used to obtain code for a specific processor. While there are many different computer architectures, both real and virtual, they have much in common and typically fall into one of two categories.

Register machines

A register machine performs all its computations in a small amount of high-speed memory called registers that are an integral part of the CPU. The source code of the program and the data used by the program are stored in large banks of memory, so that much of the machine code of a program consists of load instructions, which move data from memory to a register, and store instructions, which move data from a register to memory. A load or store of a memory cell (byte or word) is atomic.

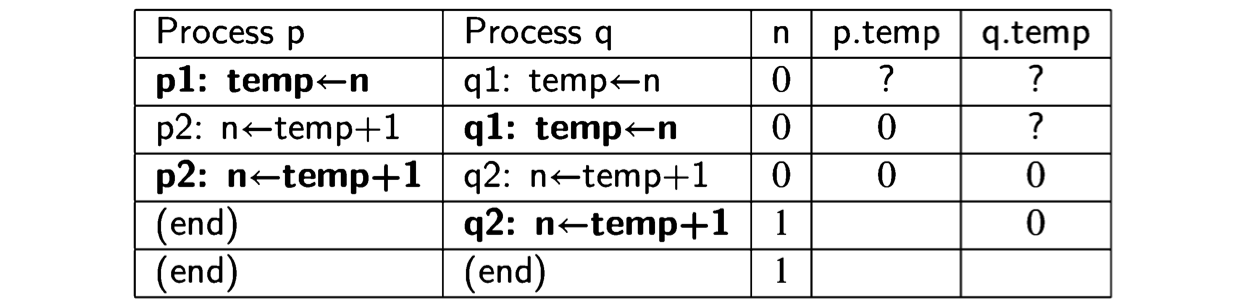

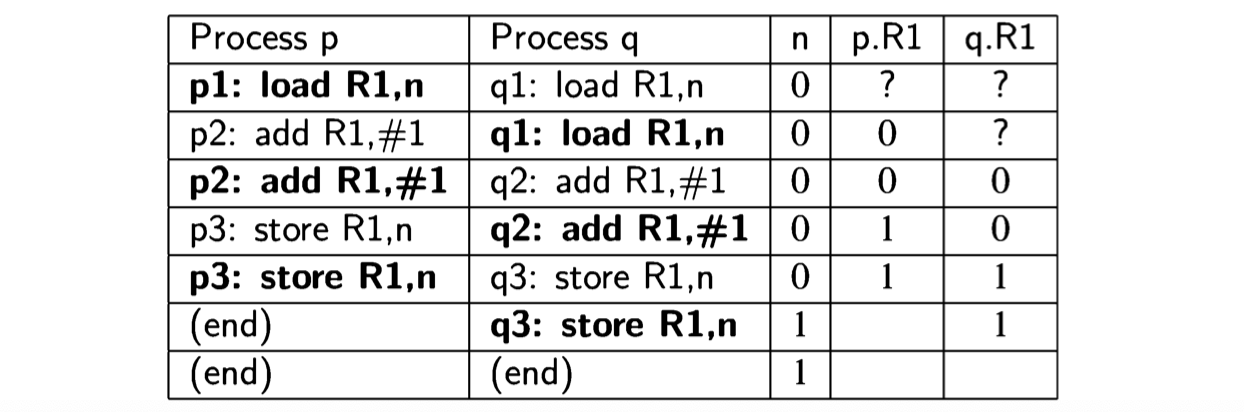

The following algorithm shows the code that would be obtained by compiling Algorithm 2.3 for a register machine:

The notation add R1, #1 means that the value 1 is added to the contents of register R1, rather than the contents of the memory cell whose address is 1.

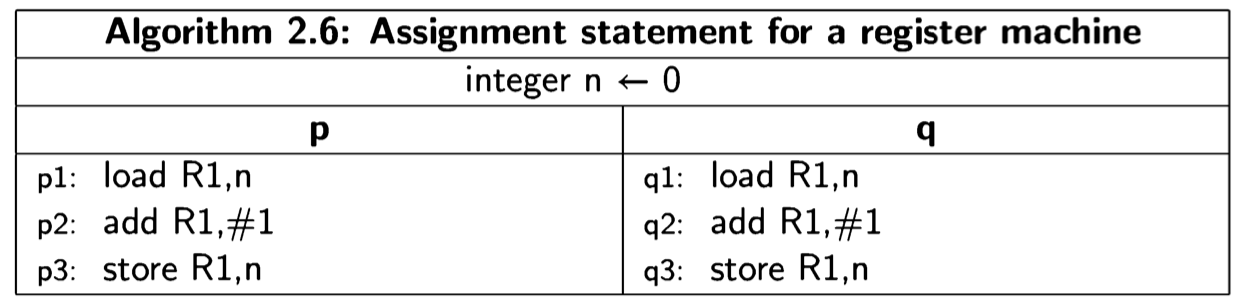

The following diagram shows the execution of the three instructions:

First, the value stored in the memory cell for n is loaded into one of the registers; second, the value is incremented within the register; and third, the value is stored back into the memory cell.

Ostensibly, both processes are using the same register R1, but in fact, each process keeps its own copy of the registers. This is true not only on a multiprocessor or distributed system where each CPU has its own set of registers, but even on a multitasking single-CPU system, as described in Section 2.3. The context switch mechanism enables each process to run within its own context consisting of the current data in the computational registers and other registers such as the control pointer. Thus we can look upon the registers as analogous to the local variables temp in Algorithm 2.4 and a bad scenario exists that is analogous to the bad scenario for that algorithm:

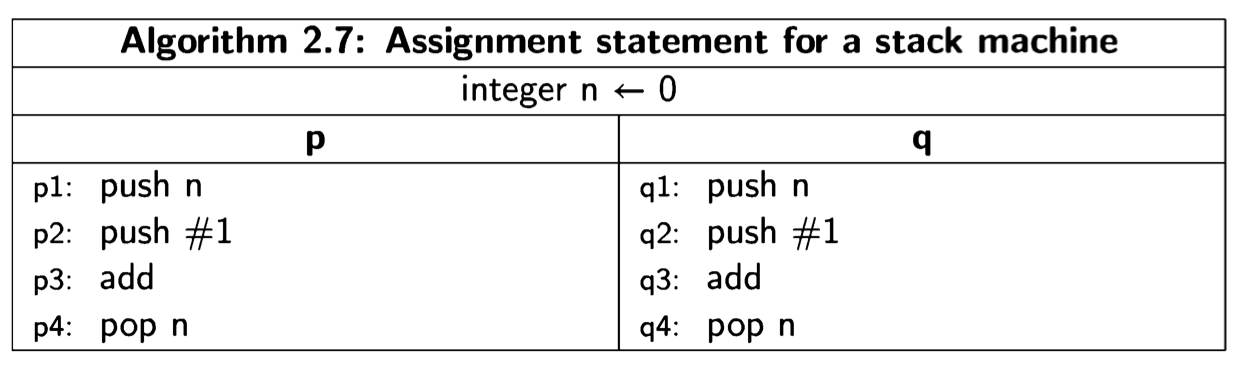
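The bad scenario can be replayed deterministically by modeling each process's private register explicitly. The following Java sketch is a simulation, not real machine code; the Cpu class and the method names are illustrative.

```java
// Sketch: two "CPUs" with private copies of register R1 share one memory cell n.
class RegisterMachine {
    static int n;                           // the shared memory cell

    // Each process has its own context, including its own copy of R1.
    static final class Cpu {
        int r1;
        void load()     { r1 = n; }         // load  R1, n
        void add(int k) { r1 += k; }        // add   R1, #k
        void store()    { n = r1; }         // store R1, n
    }

    // Instructions of p and q are interleaved one at a time.
    static int badScenario() {
        n = 0;
        Cpu p = new Cpu();
        Cpu q = new Cpu();
        p.load();  q.load();                // both registers now hold 0
        p.add(1);  q.add(1);                // both registers now hold 1
        p.store(); q.store();               // n is set to 1 twice
        return n;
    }

    // All three instructions of p are executed before those of q.
    static int goodScenario() {
        n = 0;
        Cpu p = new Cpu();
        Cpu q = new Cpu();
        p.load(); p.add(1); p.store();
        q.load(); q.add(1); q.store();
        return n;
    }

    public static void main(String[] args) {
        System.out.println("interleaved: n = " + badScenario());   // prints 1
        System.out.println("sequential:  n = " + goodScenario());  // prints 2
    }
}
```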

Stack machines

The other type of machine architecture is the stack machine. In this architecture, data is held not in registers but on a stack, and computations are implicitly performed on the top elements of a stack. The atomic instructions include push and pop, as well as instructions that perform arithmetical, logical and control operations on elements of the stack. In the register machine, the instruction add R1,#1 explicitly mentions its operands, while in a stack machine the instruction would simply be written add, and it would add the values in the top two positions in the stack, leaving the result on the top in place of the two operands:

The following diagram shows the execution of these instructions on a stack machine:

Initially, the value of the memory cell for n is pushed onto the stack, along with the constant 1. Then the two top elements of the stack are added and replaced by one element with the result. Finally (on the right), the result is popped off the stack and stored in the memory cell. Each process has its own stack, so the top of the stack, where the computation takes place, is analogous to a local variable.
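The same four steps can be traced in Java with a Deque standing in for the process's private stack. This is a sketch of the idea only; the instruction names in the comments follow the description above, and the class and method names are illustrative.

```java
import java.util.ArrayDeque;
import java.util.Deque;

// Sketch: n <- n + 1 as executed on a stack machine.
class StackMachine {
    static int n = 0;                       // the shared memory cell

    static void incrementN(Deque<Integer> stack) {
        stack.push(n);                      // push n   : copy the memory cell onto the stack
        stack.push(1);                      // push #1  : push the constant 1
        int right = stack.pop();            // add      : replace the top two elements
        int left = stack.pop();             //            by their sum
        stack.push(left + right);
        n = stack.pop();                    // pop n    : store the result in the memory cell
    }

    // Runs the increment twice on one process's stack and returns n.
    static int runTwice() {
        n = 0;
        Deque<Integer> stack = new ArrayDeque<>();  // private to this process
        incrementN(stack);
        incrementN(stack);
        return n;
    }

    public static void main(String[] args) {
        System.out.println("n = " + runTwice());    // prints n = 2
    }
}
```

Between push n and pop n, the value being computed lives only on the private stack, so another process that reads n in that window sees the old value, just as with a register.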

It is easier to write code for a stack machine, because all computation takes place in one place, whereas in a register machine with more than one computational register you have to deal with the allocation of registers to the various operands. This is a non-trivial task that is discussed in textbooks on compilation. Furthermore, since different machines have different numbers and types of registers, code for register machines is not portable. On the other hand, operations with registers are extremely fast, whereas if a stack is implemented in main memory, access to operands will be much slower.

For these reasons, stack architectures are common in virtual machines where simplicity and portability are most important. When efficiency is needed, code for the virtual machine can be compiled and optimized for a specific real machine. For our purposes the difference is not great, as long as we have the concept of memory that may be either global to all processes or local to a single process. Since global and local variables exist in high-level programming languages, we do not need to discuss machine code at all, as long as we understand that some local variables are being used as surrogates for their machine-language equivalents.

Source statements and machine instructions

We have specified that source statements like

| \[ n \leftarrow n + 1 \] |
|---|

are atomic. As shown in Algorithm 2.6 and Algorithm 2.7, such source statements must be compiled into a sequence of machine language instructions. (On some computers \(n \leftarrow n + 1\) can be compiled into an atomic increment instruction, but this is not true for a general assignment statement.) This increases the number of interleavings and leads to incorrect results that would not occur if the source statement were really executed atomically.

However, we can study concurrency at the level of source statements by decomposing atomic statements into a sequence of simpler source statements. This is what we did in Algorithm 2.4, where the above atomic statement was decomposed into the pair of statements:

| \[ temp \leftarrow n \] \[ n \leftarrow temp + 1 \] |
|---|

The concept of a simple source statement can be formally defined:

Definition 2.7 An occurrence of a variable v is defined to be a critical reference: (a) if it is assigned to in one process and has an occurrence in another process, or (b) if it has an occurrence in an expression in one process and is assigned to in another.

A program satisfies the limited-critical-reference (LCR) restriction if each statement contains at most one critical reference.

Consider the first occurrence of n in \(n \leftarrow n + 1\). It is assigned to in process p and has (two) occurrences in process q, so it is critical by (a). The second occurrence of n in \(n \leftarrow n + 1\) is critical by (b) because it appears in the expression \(n+1\) in p and is also assigned to in q. Consider now the version of the statements that uses local variables. Again, the occurrences of n are critical, but the occurrences of temp are not. Therefore, the program satisfies the LCR restriction. Concurrent programs that satisfy the LCR restriction yield the same set of behaviors whether the statements are considered atomic or are compiled to a machine architecture with atomic load and store. See [50, Section 2.2] for more details.

The more advanced algorithms in this book do not satisfy the LCR restriction, but can easily be transformed into programs that do satisfy it. The transformed programs may require additional synchronization to prevent incorrect scenarios, but the additions are simple and do not contribute to understanding the concepts of the advanced algorithms.

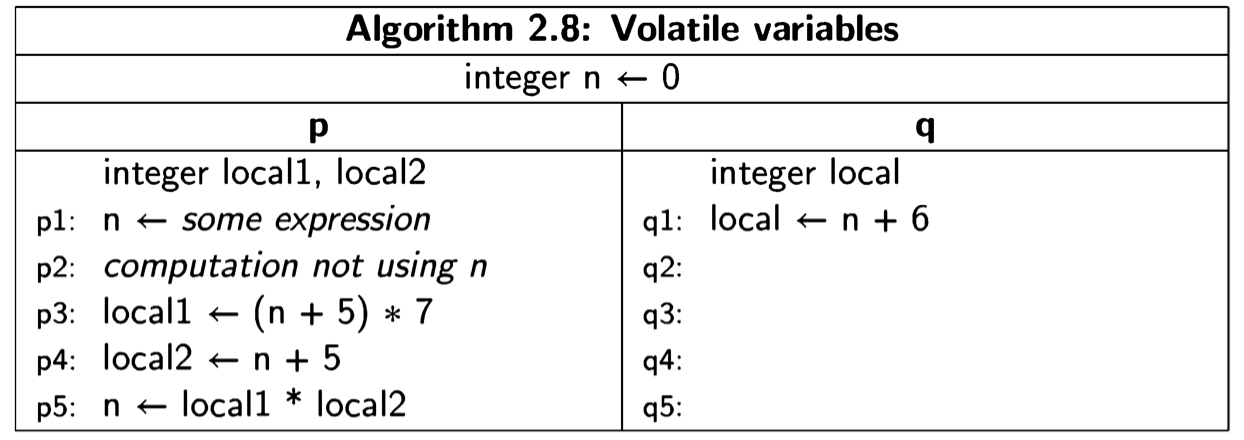

Volatile and non-atomic variables

There are further complications that arise from the compilation of high-level languages into machine code. Consider for example, the following algorithm:

The single statement in process q can be interleaved at any place during the execution of the statements of p. Because of optimization during compilation, the computation in q may not use the most recent value of n. The value of n may be maintained in a register from the assignment in p1 through the computations in p2, p3 and p4, and only actually stored back into n at statement p5. Furthermore, the compiler may re-order p3 and p4 to take advantage of the fact that the value of n+5 needed in p3 is computed in p4.

These optimizations have no semantic effect on sequential programs, but they do in concurrent programs that use global variables, so they can cause programs to be incorrect. Specifying a variable as volatile instructs the compiler to load and store the value of the variable at each use, rather than attempt to optimize away these loads and stores.
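Java is one language that provides the volatile keyword, and a small sketch shows its typical use. Here a reader thread waits on a volatile flag; without the keyword, the compiler would be free to keep the flag in a register and the loop might never observe the update. The names ready, payload and publishAndRead are hypothetical, and the value 42 is arbitrary.

```java
// Sketch: a volatile flag is re-read from memory on every loop iteration.
class VolatileFlag {
    static volatile boolean ready = false;  // loaded and stored at each use
    static int payload = 0;                 // written before ready is set

    // Returns the payload as seen by the reader thread.
    static int publishAndRead() {
        ready = false;
        payload = 0;
        int[] seen = new int[1];
        Thread reader = new Thread(() -> {
            while (!ready) {                // each test loads ready from memory
                Thread.yield();
            }
            seen[0] = payload;
        });
        reader.setDaemon(true);
        reader.start();

        payload = 42;                       // ordinary store
        ready = true;                       // volatile store: stored immediately

        try {
            reader.join(5000);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return seen[0];
    }

    public static void main(String[] args) {
        System.out.println("reader saw " + publishAndRead());
    }
}
```

In Java specifically, a volatile store also makes the earlier ordinary store to payload visible to a thread that subsequently reads the flag; that guarantee belongs to the Java memory model and goes beyond the compiler-level description given above.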

Concurrency may also affect computations with multiword variables. A load or store of a full-word variable (32 bits on most computers) is accomplished atomically, but if you need to load or store longer variables (like higher precision numbers), the operation might be carried out non-atomically. A load from another process might be interleaved between storing the lower half and the upper half of a 64-bit variable. If the processor is not able to ensure atomicity for multiword variables, it can be implemented using a synchronization mechanism such as those to be discussed throughout the book. However, these mechanisms can block processes, which may not be acceptable in a real-time system; a non-blocking algorithm for consistent access to multiword variables is presented in Section 13.9.
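The interleaving between the two halves of a multiword store can be simulated deterministically. In the Java sketch below, two int fields stand in for the lower and upper words of one 64-bit variable, and the sequence of calls plays the role of the interleaving; all names and the chosen values are illustrative.

```java
// Sketch: a 64-bit variable stored as two 32-bit words, with a load
// interleaved between the two half-stores.
class TornRead {
    static int low;                         // lower 32 bits
    static int high;                        // upper 32 bits

    static void storeLow(long v)  { low = (int) v; }
    static void storeHigh(long v) { high = (int) (v >>> 32); }

    // Reassemble the 64-bit value from the two words.
    static long load() {
        return ((long) high << 32) | (low & 0xFFFFFFFFL);
    }

    static long tornValue() {
        long oldValue = 0xFFFFFFFFL;        // low word all ones, high word 0
        long newValue = 0x100000000L;       // low word 0, high word 1
        storeLow(oldValue);
        storeHigh(oldValue);

        storeLow(newValue);                 // writer: first half of the store
        long seen = load();                 // reader: interleaved here
        storeHigh(newValue);                // writer: second half of the store
        return seen;
    }

    public static void main(String[] args) {
        System.out.println("reader saw " + tornValue());    // prints reader saw 0
    }
}
```

The reader obtains 0, which is neither the old value 4294967295 nor the new value 4294967296.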

Concurrency in Java

The Java language has supported concurrency from the very start. However, the concurrency constructs have undergone modification; in particular, several dangerous constructs have been deprecated (dropped from the language). The latest version (1.5) has an extensive library of concurrency constructs java.util.concurrent, designed by a team led by Doug Lea. For more information on this library see the Java documentation and [44]. Lea’s book explains how to integrate concurrency within the framework of object-oriented design and programming, a topic not covered in this book.

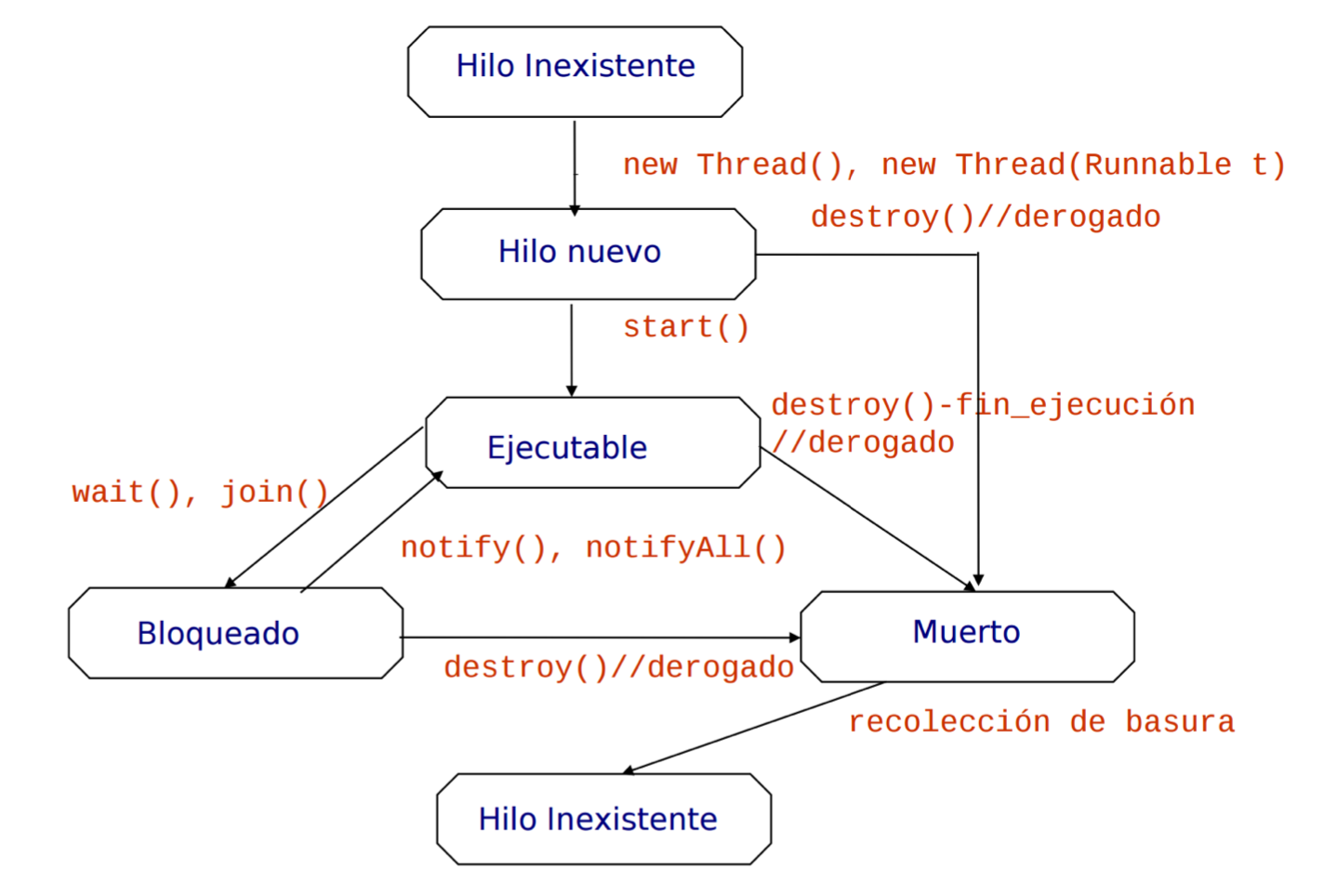

The Java language supports concurrency through objects of type Thread. You can write your own classes that extend this type as shown in Listing 2.4. Within a class that extends Thread, you have to define a method called run that contains the code to be run by this process. Once the class has been defined, you can define fields of this type and assign to them objects created by allocators (lines 11-12). However, allocation and construction of a Thread object do not cause the thread to run. You must explicitly call the start method on the object (lines 13-14), which in turn will call the run method.

The global variable n is declared to be static so that we will have one copy that is shared by all of the objects declared to be of this class. The main method of the class is used to allocate and initiate the two processes; we then use the join method to wait for termination of the processes so that we can print out the value of n. Invocations of most thread methods require that you catch (or throw) InterruptedException.

If you run this program, you will almost certainly get the answer 20, because it is highly unlikely that there will be a context switch from one task to another during the loop. You can artificially introduce context switches between the two assignment statements of the loop: the static method Thread.yield() causes the currently executing thread to temporarily cease execution, thus allowing other threads to execute.
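The listing is not reproduced in this text, so the following is a sketch of a program of the kind described rather than the original; its line numbers do not correspond to those cited above. The class name CountThread, the helper runTwoCounters and the loop bound of 10 (inferred from the expected answer 20) are assumptions. The call to Thread.yield() is placed between the two assignments, as suggested.

```java
// Sketch: two threads each increment a shared static variable ten times,
// using a local variable so that the load and the store are separate statements.
class CountThread extends Thread {
    static volatile int n = 0;              // one copy shared by all objects of the class

    @Override
    public void run() {
        for (int i = 0; i < 10; i++) {
            int temp = n;                   // load the shared variable
            Thread.yield();                 // invite a context switch here
            n = temp + 1;                   // store the incremented value
        }
    }

    // Allocates and starts two threads, waits for both, and returns n.
    static int runTwoCounters() {
        n = 0;
        CountThread p = new CountThread();  // allocation does not start the thread
        CountThread q = new CountThread();
        p.start();                          // start() in turn calls run()
        q.start();
        try {
            p.join();                       // wait for both threads to terminate
            q.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return n;
    }

    public static void main(String[] args) {
        System.out.println("The value of n is " + runTwoCounters());
    }
}
```

With the yield in place, repeated runs may print values smaller than 20; which value appears depends on the scenario that the scheduler happens to produce.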

Since Java does not have multiple inheritance, it is usually not a good idea to extend the class Thread as this will prevent extending any other class. Instead, any class can contain a thread by implementing the interface Runnable:

    class Count implements Runnable {
        @Override
        public void run() { ... }
    }

Threads are created from Runnable objects as follows:

    Count count = new Count();
    Thread t1 = new Thread(count);

or simply:

    Thread t1 = new Thread(new Count());

Volatile

A variable can be declared as volatile (Section 2.9):

volatile int n = 0;

Variables of primitive types except long and double are atomic, and long and double are also atomic if they are declared to be volatile. A reference variable is atomic, but that does not mean that the object pointed to is atomic. Similarly, if a reference variable is declared as volatile it does not follow that the object pointed to is volatile. Arrays can be declared as volatile, but not components of an array.

The Critical Section Problem

TL;DR

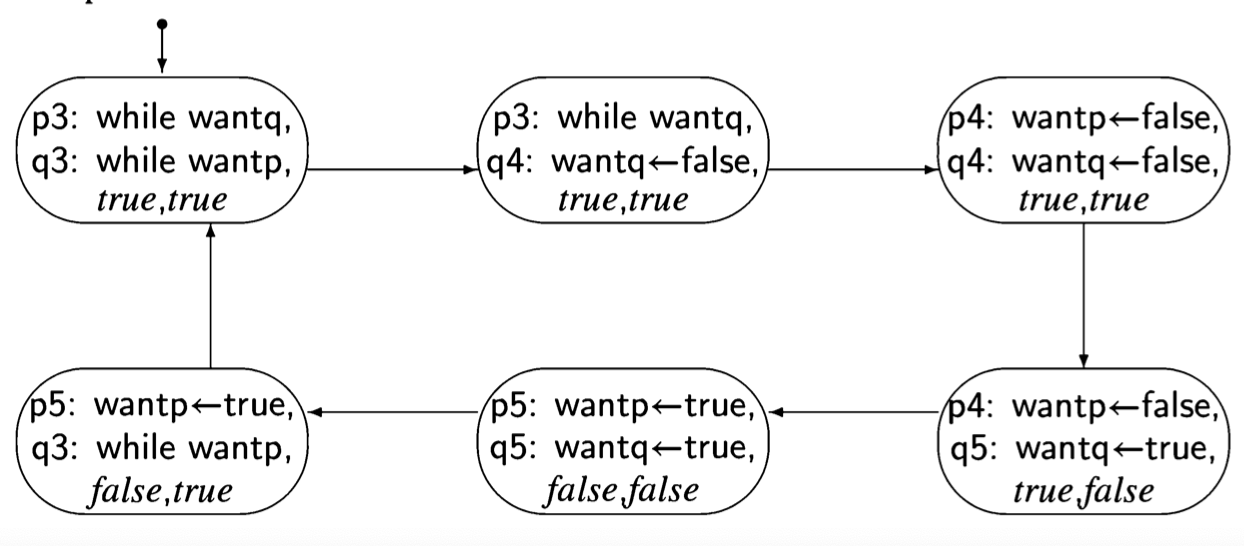

This chapter introduced the basic concepts of writing a concurrent program to solve a problem. Even in the simplest model of atomic load and store of shared memory, Dekker’s algorithm can solve the critical section problem, although it leaves much to be desired in terms of simplicity and efficiency. Along the journey to Dekker’s algorithm, we have encountered numerous ways in which concurrent programs can be faulty. Since it is impossible to check every possible scenario for faults, state diagrams are an invaluable tool for verifying the correctness of concurrent algorithms.

The next chapter will explore verification of concurrent programming in depth, first using invariant assertions which are relatively easy to understand and use, and then using deductive proofs in temporal logic which are more sophisticated. Finally, we show how programs can be verified using the Spin model checker.

Introduction

This chapter presents a sequence of attempts to solve the critical section problem for two processes, culminating in Dekker’s algorithm. The synchronization mechanisms will be built without the use of atomic statements other than atomic load and store. Following this sequence of algorithms, we will briefly present solutions to the critical section problem that use more complex atomic statements. In the next chapter, we will present algorithms for solving the critical section problem for N processes.

Algorithms like Dekker’s algorithm are rarely used in practice, primarily because real systems support higher synchronization primitives like those to be discussed in subsequent chapters (though see the discussion of “fast” algorithms in Section 5.4). Nevertheless, this chapter is the heart of the book, because each incorrect algorithm demonstrates some pathological behavior that is typical of concurrent algorithms. Studying these behaviors within the framework of these elementary algorithms will prepare you to identify and correct them in real systems.

The proofs of the correctness of the algorithms will be based upon the explicit construction of state diagrams in which all scenarios are represented. Some of the proofs are left to the next chapter, because they require more complex tools, in particular the use of temporal logic to specify and verify correctness properties.

The definition of the problem

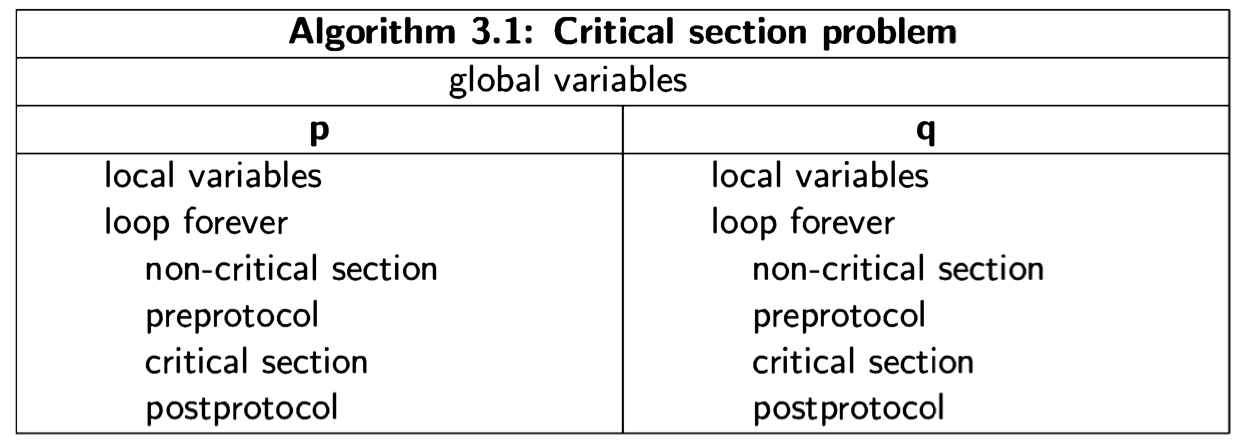

We start with a specification of the structure of the critical section problem and the assumptions under which it must be solved:

- Each of N processes is executing in an infinite loop a sequence of statements that can be divided into two subsequences: the critical section and the non-critical section.

- The correctness specifications required of any solution are:

  - Mutual exclusion Statements from the critical sections of two or more processes must not be interleaved.

  - Freedom from deadlock If some processes are trying to enter their critical sections, then one of them must eventually succeed.

  - Freedom from (individual) starvation If any process tries to enter its critical section, then that process must eventually succeed.

- A synchronization mechanism must be provided to ensure that the correctness requirements are met. The synchronization mechanism consists of additional statements that are placed before and after the critical section. The statements placed before the critical section are called the preprotocol and those after it are called the postprotocol. Thus, the structure of a solution for two processes is as follows:

- The protocols may require local or global variables, but we assume that no variables used in the critical and non-critical sections are used in the protocols, and vice versa.

- The critical section must progress, that is, once a process starts to execute the statements of its critical section, it must eventually finish executing those statements.

- The non-critical section need not progress, that is, if the control pointer of a process is at or in its non-critical section, the process may terminate or enter an infinite loop and not leave the non-critical section.

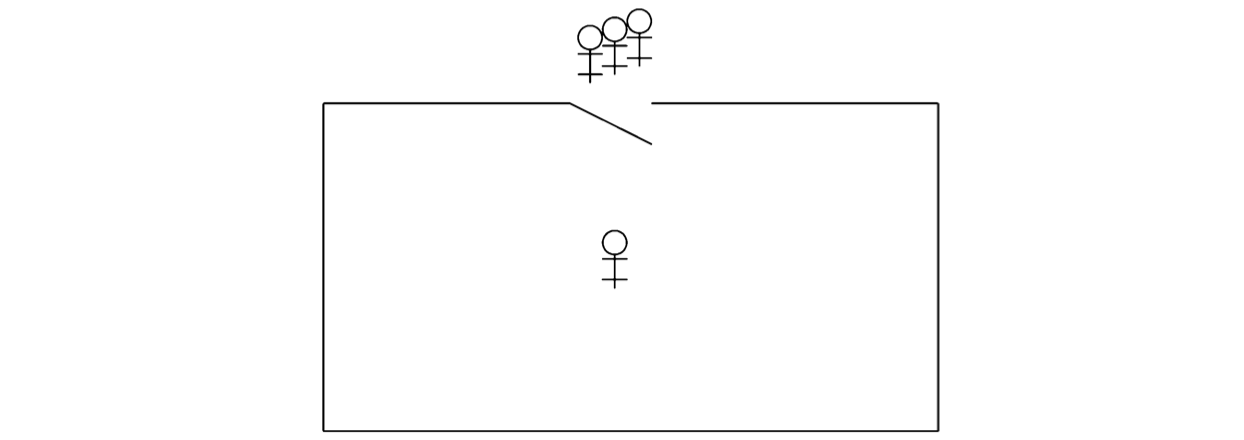

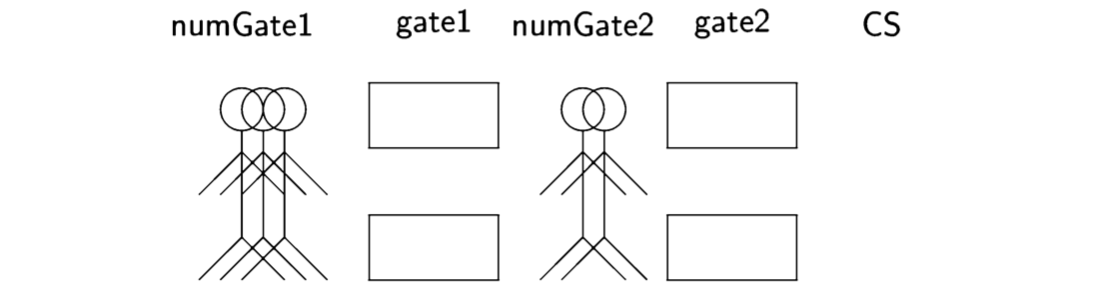

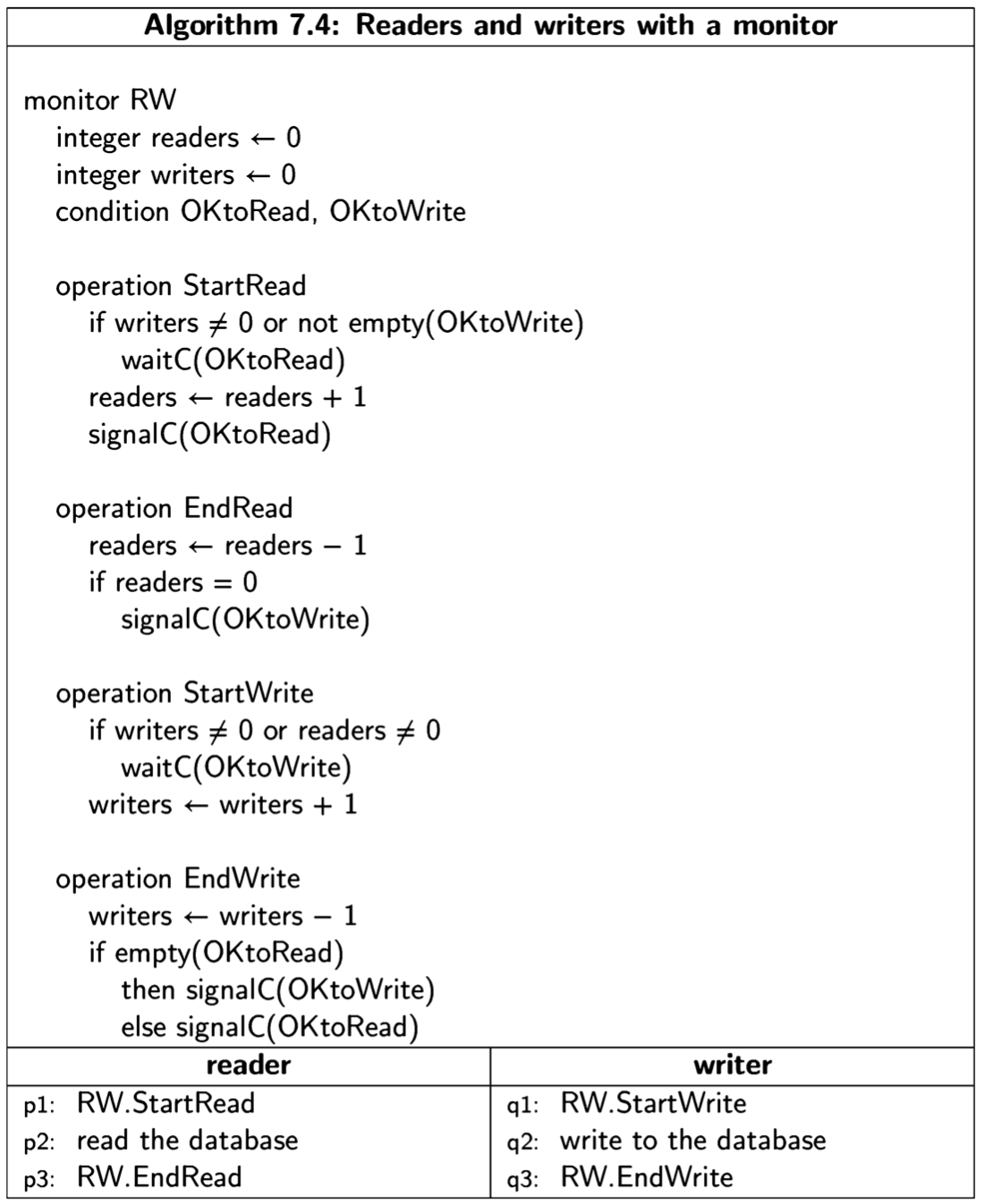

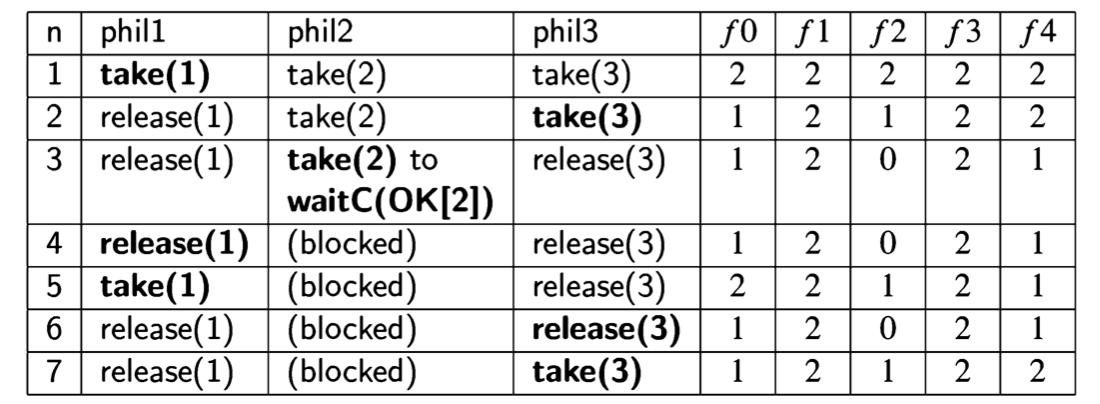

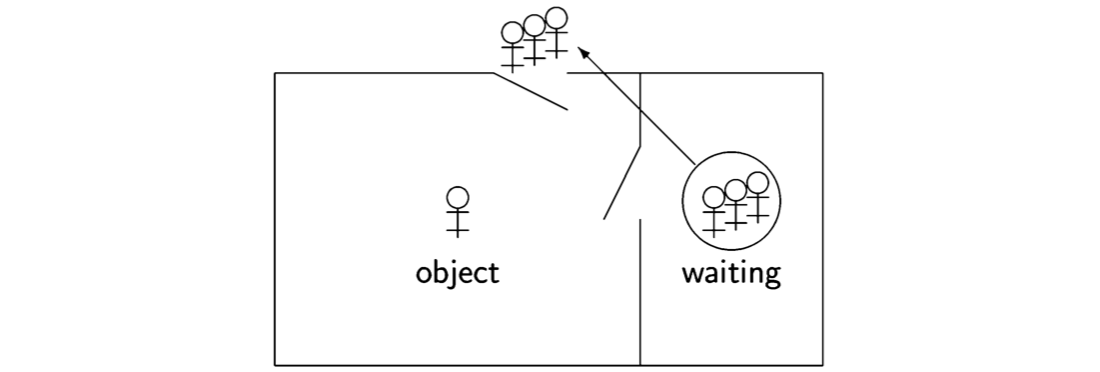

The following diagram will help visualize the critical section problem:

The stick figures represent processes and the box is a critical region in which at most one process at a time may be executing the statements that form its critical section. The solution to the problem is given by the protocols for opening and closing the door to the critical region in such a manner that the correctness properties are satisfied.

The critical section problem is intended to model a system that performs complex computation, but occasionally needs to access data or hardware that is shared by several processes. It is unreasonable to expect that individual processes will never terminate, or that nothing bad will ever occur in the programs of the system. For example, a check-in kiosk at an airport waits for a passenger to swipe his credit card or touch the screen. When that occurs, the program in the kiosk will access a central database of passengers, and it is important that only one of these programs update the database at a time. The critical section is intended to model this operation only, while the non-critical section models all the computation of the program except for the actual update of the database.

It would not make sense to require that the program actually participate in the update of the database by programs in other kiosks, so we allow it to wait indefinitely for input, and we take into account that the program could malfunction. In other words, we do not require that the non-critical section progress. On the other hand, we do require that the critical section progress so that it eventually terminates. The reason is that the process executing a critical section typically holds a lock or permission resource, and the lock or resource must eventually be released so that other processes can enter their critical sections. The requirement for progress is reasonable, because critical sections are usually very short and their progress can be formally verified.

Obviously, deadlock ("my computer froze up") must be avoided, because systems are intended to provide a service. Even if there is local progress within the protocols as the processes set and check the protocol variables, if no process ever succeeds in making the transition from the preprotocol to the critical section, the program is deadlocked.

Freedom from starvation is a strong requirement in that it must be shown that no possible execution sequence of the program, no matter how improbable, can cause starvation of any process. This requirement can be weakened; we will see one example in the algorithm of Section 5.4.

A good solution to the critical section problem will also be efficient, in the sense that the pre- and postprotocols will use as little time and memory as possible. In particular, if only a single process wishes to enter its critical section it will succeed in doing so almost immediately.

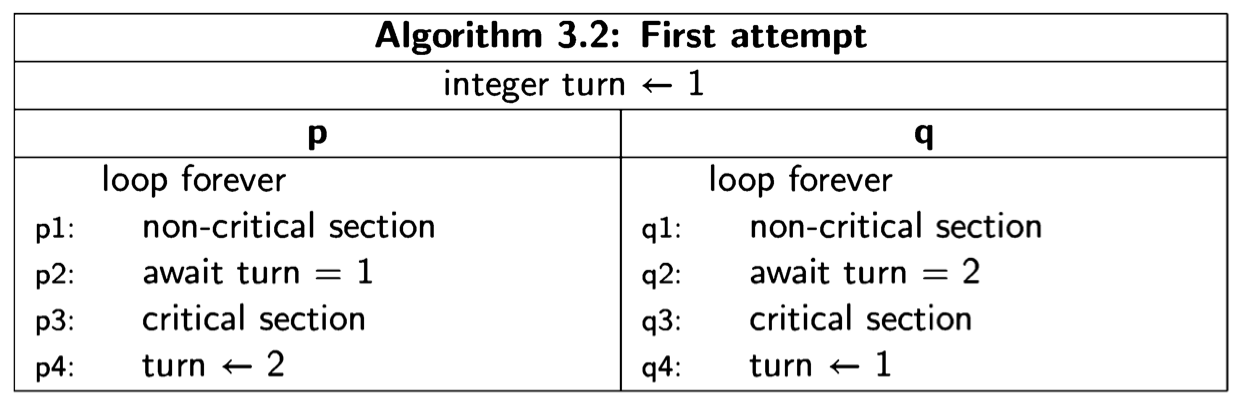

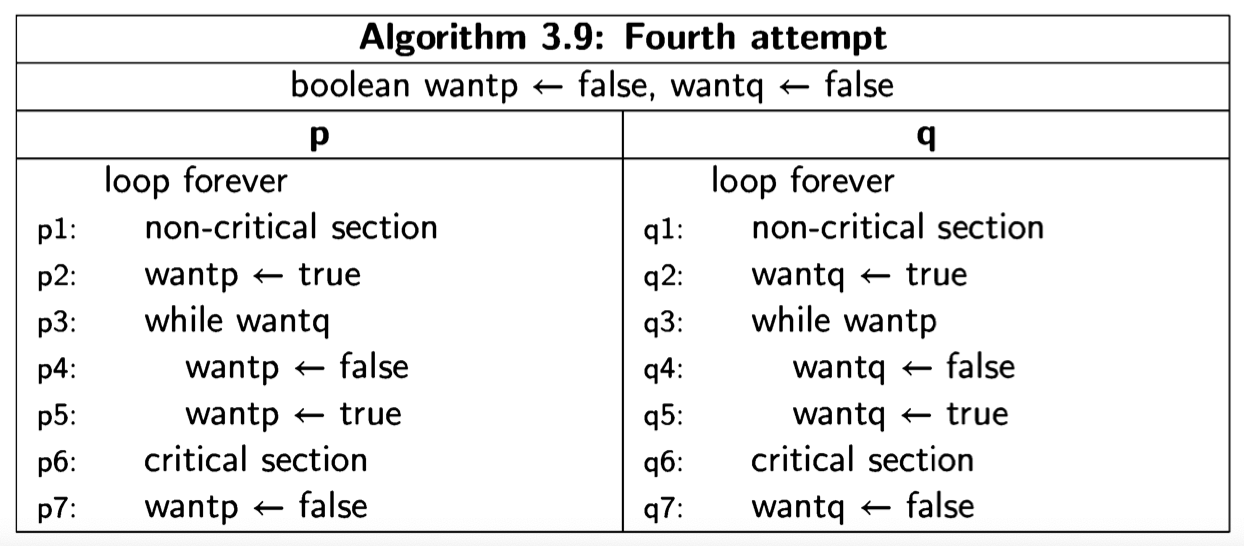

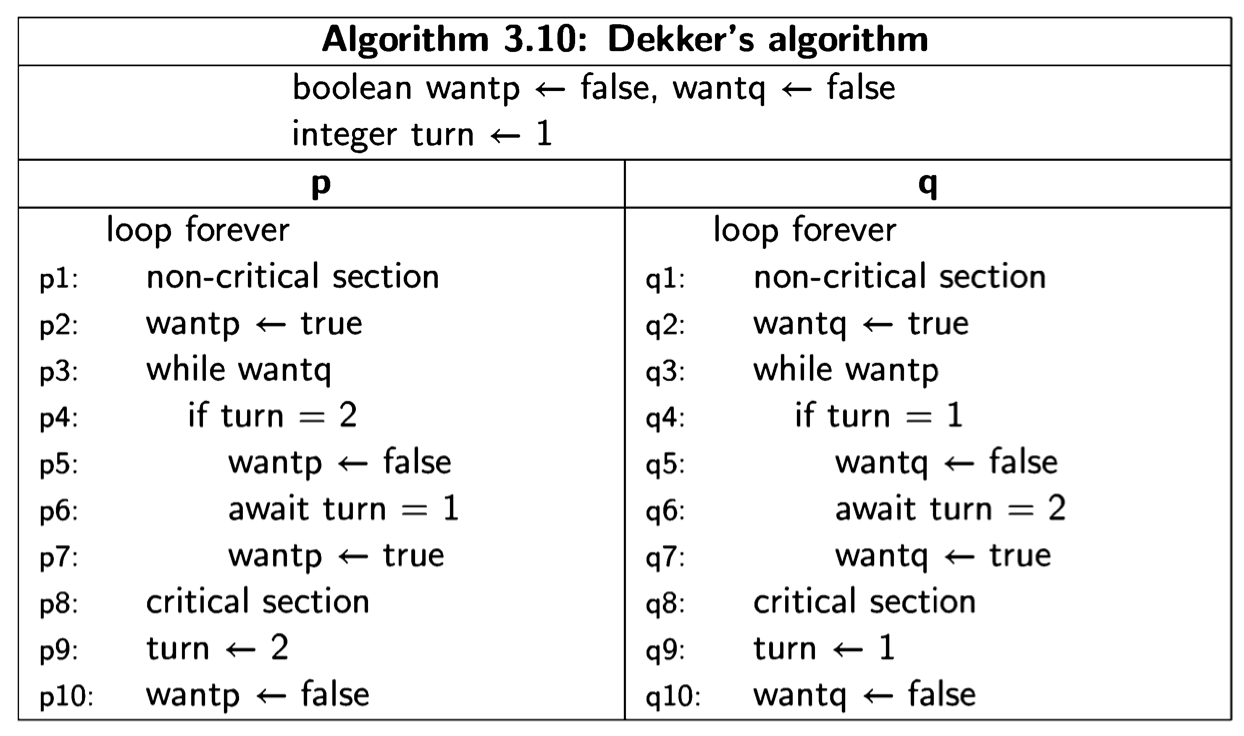

First attempt

Here is a first attempt at solving the critical section problem for two processes:

The statement await turn=1 is an implementation-independent notation for a statement that waits until the condition turn=1 becomes true. This can be implemented (albeit inefficiently) by a busy-wait loop that does nothing until the condition is true.

The global variable turn can take the two values 1 and 2, and is initially set to the arbitrary value 1. The intended meaning of the variable is that it indicates whose “turn” it is to enter the critical section. A process wishing to enter the critical section will execute a preprotocol consisting of a statement that waits until the value of turn indicates that its turn has arrived. Upon exiting the critical section, the process sets the value of turn to the number of the other process.
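The description above can be sketched with two Python threads. This is a minimal illustration and not the book's algorithm listing. The names `await_until`, `run_first_attempt`, `state` and `entries` are ours, the number of rounds is bounded so that the program terminates, and the await statement is implemented by the inefficient busy-wait loop mentioned above.

```python
import threading
import time

def await_until(condition):
    """Busy-wait until condition() is true (inefficient, as noted above)."""
    while not condition():
        time.sleep(0)  # yield so that the other thread gets a chance to run

def run_first_attempt(rounds=5):
    """Run both processes for a bounded number of rounds; return the entry order."""
    state = {"turn": 1}   # the global variable turn, initially 1
    entries = []          # which process entered its critical section, in order

    def process(me, other):
        for _ in range(rounds):          # the book's processes loop forever
            # non-critical section (empty in this sketch)
            await_until(lambda: state["turn"] == me)   # preprotocol
            entries.append(me)                         # critical section
            state["turn"] = other                      # postprotocol

    threads = [
        threading.Thread(target=process, args=(1, 2)),
        threading.Thread(target=process, args=(2, 1)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return entries
```

Because the value of turn forces the processes to alternate, `run_first_attempt(3)` returns the entry order 1, 2, 1, 2, 1, 2 however the threads happen to be scheduled.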

We want to prove that this algorithm satisfies the three properties required of a solution to the critical section problem. To that end we first explain the construction of state diagrams for concurrent programs, a concept that was introduced in Section 2.2.

Proving correctness with state diagrams

States

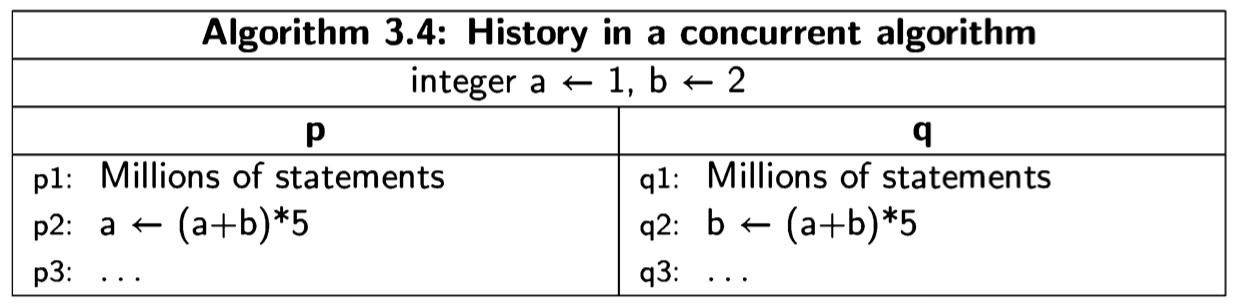

You do not need to know the history of the execution of an algorithm in order to predict the result of the next step to be executed. Let us first examine this claim for sequential algorithms and then consider how it has to be modified for concurrent algorithms. Consider the following algorithm:

Suppose now that the computation has reached statement p2, and the values of a and b are 10 and 20, respectively. You can now predict with absolute certainty that as a result of the execution of statement p2, the value of a will become 150, while the value of b will remain 20; furthermore, the control pointer of the computer will now contain p3. The history of the computation (how exactly it got to statement p2 with those values of the variables) is irrelevant.

For a concurrent algorithm, the situation is similar:

Suppose that the execution has reached the point where the first process has reached statement p2 and the second has reached q2, and the values of a and b are again 10 and 20, respectively. Because of the interleaving, we cannot predict whether the next statement to be executed will come from process p or from process q; but we can predict that it will be from either one or the other, and we can specify what the outcome will be in either case.

In the sequential Algorithm 3.3, states are triples such as \(s_i = (p2, 10,20)\). From the semantics of assignment statements, we can predict that executing the statement p2 in state \(s_i\) will cause the state of the computation to change to \(s_{i+1} = (p3, 150, 20)\). Thus, given the initial state of the computation \(s_0 = (p1, 1, 2)\), we can predict the result of the computation. (If there are input statements, the values placed into the input variables are also part of the state.) It is precisely this property that makes debugging practical: if you find an error, you can set a breakpoint and restart a computation in the same initial state, confident that the state of the computation at the breakpoint is the same as it was in the erroneous computation.

In the concurrent Algorithm 3.4, states are quadruples such as \(s_i = (p2, q2, 10, 20)\). We cannot predict what the next state will be, but we can be sure that it is either \(s^{p}_{i+1} = (p3, q2, 150, 20)\) or \(s^{q}_{i+1} = (p2, q3, 10, 150)\), depending on whether the next statement executed is from process p or process q, respectively. While we cannot predict which states will appear in any particular execution of a concurrent algorithm, the reachable states (Definition 2.5) are the only states that can appear in any computation. In the example, starting from state \(s_i\), the next state will be an element of the set \(\{s^{p}_{i+1}, s^{q}_{i+1}\}\). From each of these states, there are possibly two new states that can be reached, and so on. To check correctness properties, it is only necessary to examine the set of reachable states and the transitions among them; these are represented in the state diagram.
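The difference between the two kinds of state can be sketched in a few lines of Python. The statements themselves are an assumption: we take p2 to be a ← (a+b)*5 and q2 to be b ← (a+b)*5, which is consistent with the values 10, 20 and 150 used in the text. The function names are ours, and only the single step discussed above is modeled.

```python
def exec_p2(a, b):
    # Assumed statement p2: a <- (a + b) * 5
    return (a + b) * 5, b

def exec_q2(a, b):
    # Assumed statement q2: b <- (a + b) * 5
    return a, (a + b) * 5

def sequential_next(state):
    """A sequential state (label, a, b) has exactly one successor."""
    label, a, b = state
    if label != "p2":
        raise ValueError("only statement p2 is modeled in this sketch")
    a, b = exec_p2(a, b)
    return ("p3", a, b)

def concurrent_next(state):
    """A concurrent state (p, q, a, b) has one successor per process."""
    p, q, a, b = state
    if (p, q) != ("p2", "q2"):
        raise ValueError("only the state at (p2, q2) is modeled in this sketch")
    after_p = ("p3", q) + exec_p2(a, b)   # the next statement comes from p
    after_q = (p, "q3") + exec_q2(a, b)   # the next statement comes from q
    return {after_p, after_q}
```

The sequential function returns a single state, while the concurrent one returns a set: the interleaving decides which element of the set actually occurs, but nothing outside the set can occur.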

For the first attempt, Algorithm 3.2, states are triples of the form \((p_i,q_j, turn)\), where turn denotes the value of the variable turn. Remember that we are assuming that any variables used in the critical and non-critical sections are distinct from the variables used in the protocols and so cannot affect the correctness of the solution. Therefore, we leave them out of the description of the state. The mutual exclusion correctness property holds if the set of all accessible states does not contain a state of the form (p3, q3, turn) for some value of turn, because p3 and q3 are the labels of the critical sections.

State diagrams

How many states can there be in a state diagram? Suppose that the algorithm has N processes with \(n_i\) statements in process i, and M variables where variable j has \(m_j\) possible values. The number of possible states is the number of tuples that can be formed from these values, and we can choose each element of the tuple independently, so the total number of states is \(n_1 \times \dots \times n_N \times m_1 \times \dots \times m_M\). For the first attempt, the number of states is \(n_1 \times n_2 \times m_1 = 4 \times 4 \times 2 = 32\), since it is clear from the text of the algorithm that the variable turn can only have two values, 1 and 2. In general, variables may have as many values as their representation can hold, for example, \(2^{32}\) for a 32-bit integer variable.
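The product is easy to evaluate mechanically. The helper below is a small sketch; the name `max_states` and its two list parameters are ours.

```python
from math import prod

def max_states(statements_per_process, values_per_variable):
    """Upper bound on the number of states: the product of all the counts."""
    return prod(statements_per_process) * prod(values_per_variable)

# First attempt: two processes with four statements each, and turn in {1, 2}.
first_attempt_bound = max_states([4, 4], [2])

# The same algorithm if turn were treated as an arbitrary 32-bit integer.
unrestricted_bound = max_states([4, 4], [2**32])
```

The second figure shows why it matters that turn is known to take only two values: without that observation the bound grows by a factor of \(2^{31}\).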

However, it is possible that not all states can actually occur, that is, it is possible that some states do not appear in any scenario starting from the initial state \(s_0 = (p1, q1, 1)\). In fact, we hope so! We hope that the states \((p3, q3, 1)\) and \((p3, q3, 2)\), which violate the correctness requirement of mutual exclusion, are not accessible from the initial state.

To prove a property of a concurrent algorithm, we construct a state diagram and then check whether the property holds in the diagram. The diagram is constructed incrementally, starting with the initial state and considering what the potential next states are. If a potential next state has already been constructed, then we can connect to it, thus obtaining a finite presentation of an unbounded execution of the algorithm. By the nature of the incremental construction, turn will only have the values 1 and 2, because these are the only values that are ever assigned by the algorithm.

The following diagram shows the first four steps of the incremental construction of the state diagram for Algorithm 3.2:

The initial state is \((p1, q1, 1)\). If we execute p1 from process p, the next state is \((p2, q1, 1)\): the first element of the tuple changes because we executed a statement of the first process, the second element does not change because we did not execute a statement of that process, and the third element does not change since by assumption the non-critical section does not change the value of the variable turn. If, however, we execute q1 from process q, the next state is \((p1, q2, 1)\). From this state, if we try to execute another statement, q2, from process q, we remain in the same state. The statement is await turn=2, but turn = 1. We do not draw another instance of \((p1, q2, 1)\); instead, the arrow representing the transition points to the existing state.

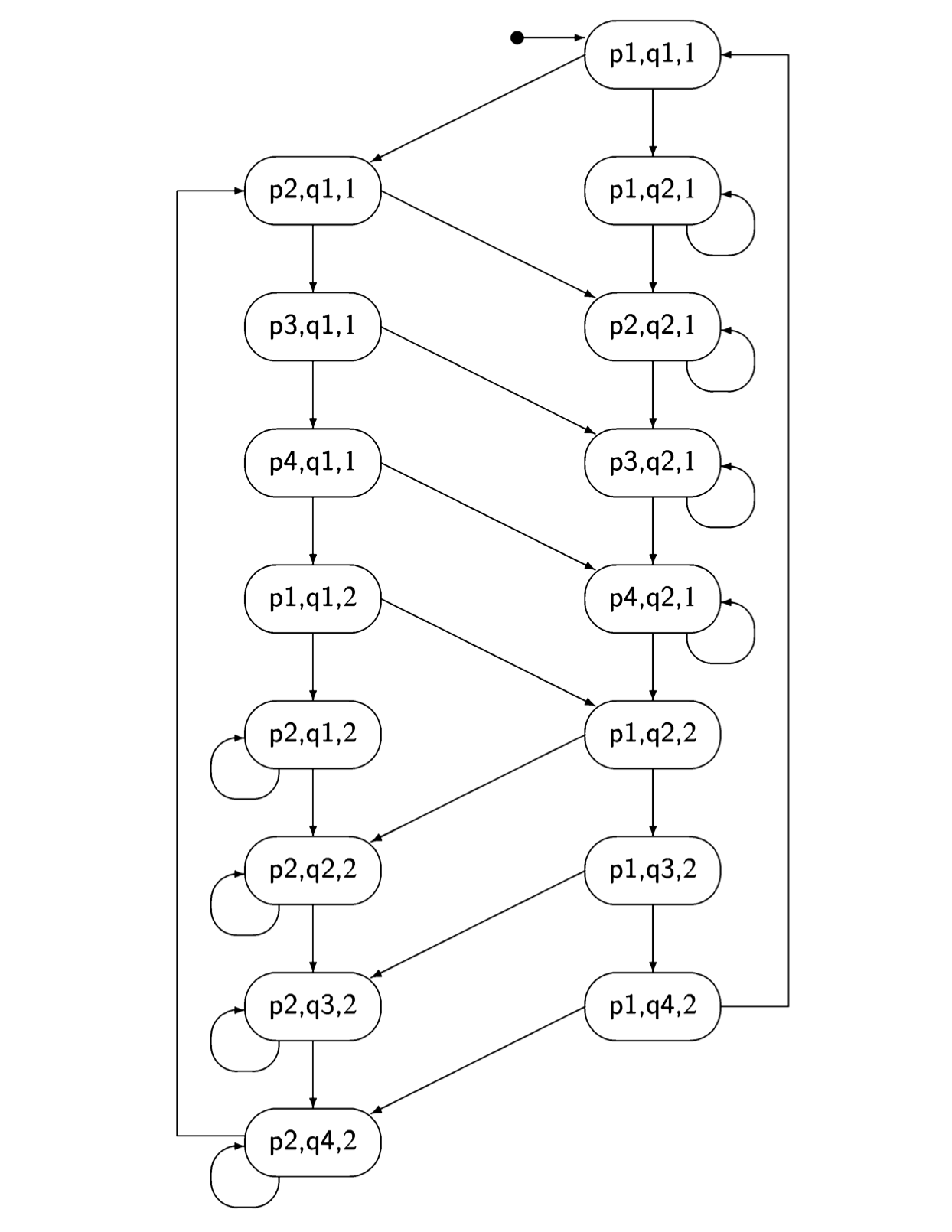

The incremental construction terminates after 16 of the 32 possible states have been constructed, as shown in Figure 3.1. You may (or may not!) want to check if the construction has been carried out correctly.

A quick check shows that neither \((p3, q3, 1)\) nor \((p3, q3, 2)\) occurs; thus we have proved that the mutual exclusion property holds for the first attempt.
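The incremental construction can also be carried out mechanically. The following sketch encodes the state \((p_i, q_j, turn)\) as the tuple `(i, j, turn)` and takes the statements from the description of the first attempt: p1 and q1 are the non-critical sections, p2 and q2 the await statements, p3 and q3 the critical sections, and p4 and q4 the assignments to turn. The function names are ours.

```python
from collections import deque

def successors(state):
    """Next states of (i, j, turn), meaning p is at p_i and q is at q_j."""
    i, j, turn = state
    # Process p: p1 non-critical section, p2 await turn=1,
    #            p3 critical section,     p4 turn <- 2
    if i == 2:
        p_next = (3, j, turn) if turn == 1 else state  # blocked await stays put
    elif i == 4:
        p_next = (1, j, 2)
    else:
        p_next = (i + 1, j, turn)
    # Process q: q1 non-critical section, q2 await turn=2,
    #            q3 critical section,     q4 turn <- 1
    if j == 2:
        q_next = (i, 3, turn) if turn == 2 else state
    elif j == 4:
        q_next = (i, 1, 1)
    else:
        q_next = (i, j + 1, turn)
    return {p_next, q_next}

def reachable(initial=(1, 1, 1)):
    """Incrementally construct the set of states reachable from initial."""
    seen = {initial}
    frontier = deque([initial])
    while frontier:
        for nxt in successors(frontier.popleft()):
            if nxt not in seen:      # an existing state is reused, not redrawn
                seen.add(nxt)
                frontier.append(nxt)
    return seen

states = reachable()
mutual_exclusion_holds = all((i, j) != (3, 3) for i, j, _ in states)
```

Under these assumptions the search stops with 16 states, none of which has both processes at their critical sections, in agreement with the diagram.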

Abbreviating the state diagram

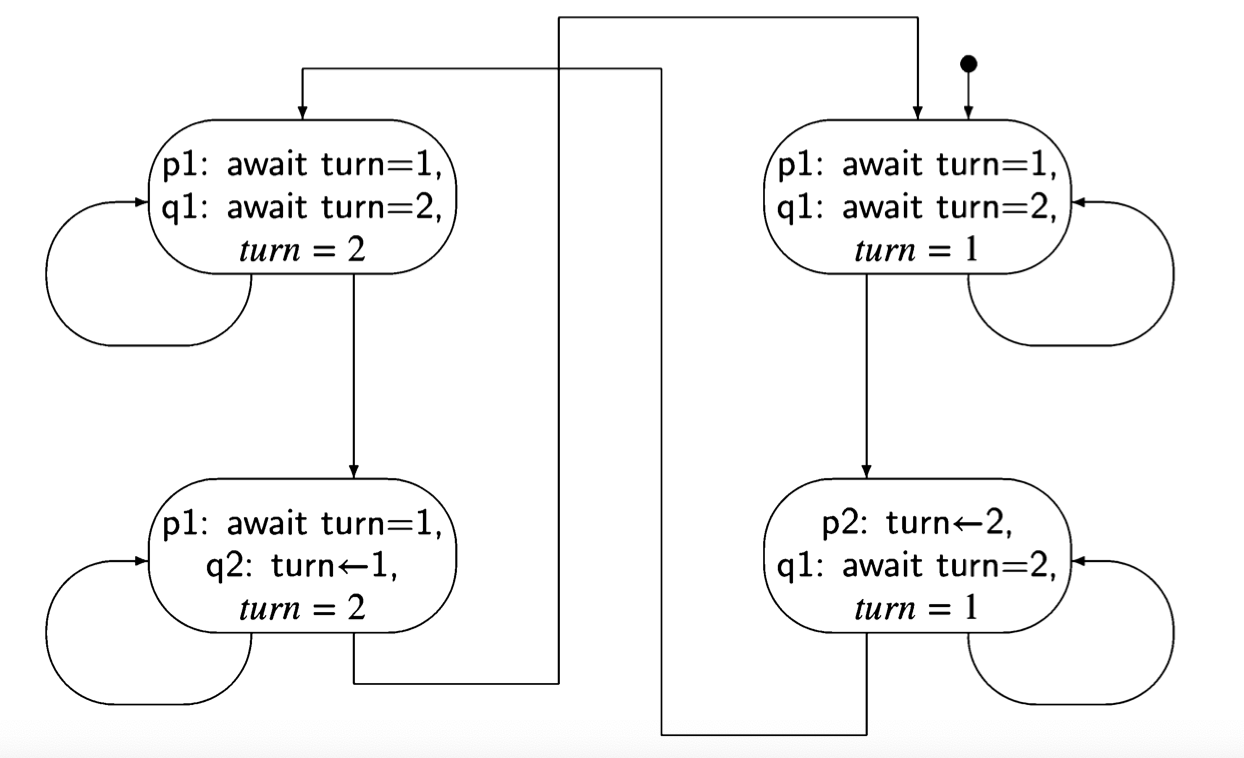

Clearly, the state diagram of the simple algorithm in the first attempt is unwieldy. When state diagrams are built, it is important to minimize the number of states that must be constructed. In this case, we can reduce the number of accessible states from 16 to 4, by reducing the algorithm to the one shown below, where we have removed the two statements for the critical and non-critical sections.

Admittedly, this is suspicious. The whole point of the algorithm is to ensure that mutual exclusion holds during the execution of the critical sections, so how can we simply erase these statements? The answer is that whatever statements are executed by the critical section are totally irrelevant to the correctness of the synchronization algorithm.

If, in the first attempt, we replace the statement p3: critical section by the comment p3: // critical section, the specification of the correctness properties remains unchanged; for example, we must still show that we cannot be in either of the states \((p3, q3, 1)\) or \((p3, q3, 2)\). But if we are at p3 and that is a comment, it is just as if we were at p4, so we can remove the comment; similar reasoning holds for p1. In the abbreviated first attempt, the critical sections have been swallowed up by \(turn \leftarrow 2\) and \(turn \leftarrow 1\), and the non-critical sections have been swallowed up by await \(turn=1\) and await \(turn=2\). The state diagram for this algorithm is shown in Figure 3.2, where for clarity we have given the actual statements and variable names instead of just labels.

Correctness of the first attempt

We are now in a position to try to prove the correctness of the first attempt. As noted above, the proof that mutual exclusion holds is immediate from an examination of the state diagram.

Next we have to prove that the algorithm is free from deadlock. Recall that this means that if some processes are trying to enter their critical section then one of them must eventually succeed. In the abbreviated algorithm, a process is trying to enter its critical section if it is trying to execute its await statement. We have to check this for the four states; since the two left states are symmetrical with the two right states, it suffices to check one of the pairs.

Consider the upper left state (await \(turn=1\), await \(turn=2\), \(turn = 2\)). Both processes are trying to enter their critical sections; if process q tries to execute await \(turn=2\), it will succeed and enter its critical section.

Consider now the lower left state (await \(turn=1\), \(turn \leftarrow 1\), \(turn = 2\)). Process p may try to execute await turn=1, but since \(turn = 2\), it does not change the state. By the assumption of progress on the critical section and the assignment statement, process q will eventually execute \(turn \leftarrow 1\), leading to the upper right state. Now, process p can enter its critical section. Therefore, the property of freedom from deadlock is satisfied.
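The same case analysis can be written as a small check over the abbreviated algorithm. In this sketch a state is `(i, j, turn)`, with p1 and q1 the await statements and p2 and q2 the assignments that stand for the critical sections. The function names are ours, and the check mirrors the informal argument above and is not a general-purpose deadlock detector.

```python
def successors(state):
    """Abbreviated first attempt: p1 await turn=1, p2 turn <- 2,
    q1 await turn=2, q2 turn <- 1."""
    i, j, turn = state
    if i == 1:
        p_next = (2, j, turn) if turn == 1 else state  # blocked await: no change
    else:
        p_next = (1, j, 2)
    if j == 1:
        q_next = (i, 2, turn) if turn == 2 else state
    else:
        q_next = (i, 1, 1)
    return {p_next, q_next}

def reachable(initial=(1, 1, 1)):
    seen, stack = {initial}, [initial]
    while stack:
        for nxt in successors(stack.pop()):
            if nxt not in seen:
                seen.add(nxt)
                stack.append(nxt)
    return seen

def some_await_enabled(state):
    """True if a process at its await statement can pass it right now."""
    i, j, turn = state
    return (i == 1 and turn == 1) or (j == 1 and turn == 2)

def deadlock_free(states):
    """Either some process can enter now, or the only real moves (assignments,
    which progress by assumption) lead to a state where some process can."""
    for s in states:
        if some_await_enabled(s):
            continue
        moves = successors(s) - {s}
        if not moves or not all(some_await_enabled(t) for t in moves):
            return False
    return True

states = reachable()
```

Only four states are reachable, and each passes the check, matching the argument made for the two left-hand states and, by symmetry, the two right-hand ones.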

Finally, we have to check that the algorithm is free from starvation, meaning that if any process is about to execute its preprotocol (thereby indicating its intention to enter its critical section), then eventually it will succeed in entering its critical section. The problem will be easier to understand if we consider the state diagram for the unabbreviated algorithm; the appropriate fragment of the diagram is shown in Figure 3.3, where NCS denotes the non-critical section. Consider the state at the lower left. Process p is trying to enter its critical section by executing p2: await \(turn=1\), but since \(turn = 2\), the process will loop indefinitely in its await statement until process q executes q4: \(turn \leftarrow 1\). But process q is at q1: NCS and there is no assumption of progress for non-critical sections. Therefore, starvation has occurred: process p is continually checking the value of turn trying to enter its critical section, but process q need never leave its non-critical section, which is necessary if p is to enter its critical section.
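The starvation scenario can be located mechanically in the unabbreviated algorithm. The sketch below rebuilds the reachable states \((p_i, q_j, turn)\), encoded as `(i, j, turn)`, and then searches for states in which one process is blocked at its await statement while the other is at its non-critical section. The function names are ours.

```python
def successors(state):
    """First attempt: statements 1 NCS, 2 await, 3 CS, 4 assignment to turn."""
    i, j, turn = state
    if i == 2:
        p_next = (3, j, turn) if turn == 1 else state
    elif i == 4:
        p_next = (1, j, 2)
    else:
        p_next = (i + 1, j, turn)
    if j == 2:
        q_next = (i, 3, turn) if turn == 2 else state
    elif j == 4:
        q_next = (i, 1, 1)
    else:
        q_next = (i, j + 1, turn)
    return {p_next, q_next}

def reachable(initial=(1, 1, 1)):
    seen, stack = {initial}, [initial]
    while stack:
        for nxt in successors(stack.pop()):
            if nxt not in seen:
                seen.add(nxt)
                stack.append(nxt)
    return seen

def starvation_witnesses(states):
    """States where one process is blocked at its await while the other
    sits in its non-critical section, which it is never obliged to leave."""
    found = set()
    for i, j, turn in states:
        if i == 2 and turn == 2 and j == 1:   # p blocked, q at q1: NCS
            found.add((i, j, turn))
        if j == 2 and turn == 1 and i == 1:   # q blocked, p at p1: NCS
            found.add((i, j, turn))
    return found

witnesses = starvation_witnesses(reachable())
```

Two such states are found: \((p2, q1, 2)\), the case discussed above, and its mirror image \((p1, q2, 1)\). In each, the blocked process can only repeat its await statement, and nothing in the assumptions forces the other process to move.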

Informally, turn serves as a permission resource to enter the critical section, with its value indicating which process holds the resource. There is always some process holding the permission resource, so some process can always enter the critical section, ensuring that there is no deadlock. However, if the process holding the permission resource remains indefinitely in its non-critical section—as allowed by the assumptions of the critical section problem—the other process will never receive the resource and will never enter its critical section. In our next attempt, we will ensure that a process in its non-critical section cannot prevent another one from entering its critical section.

Second attempt

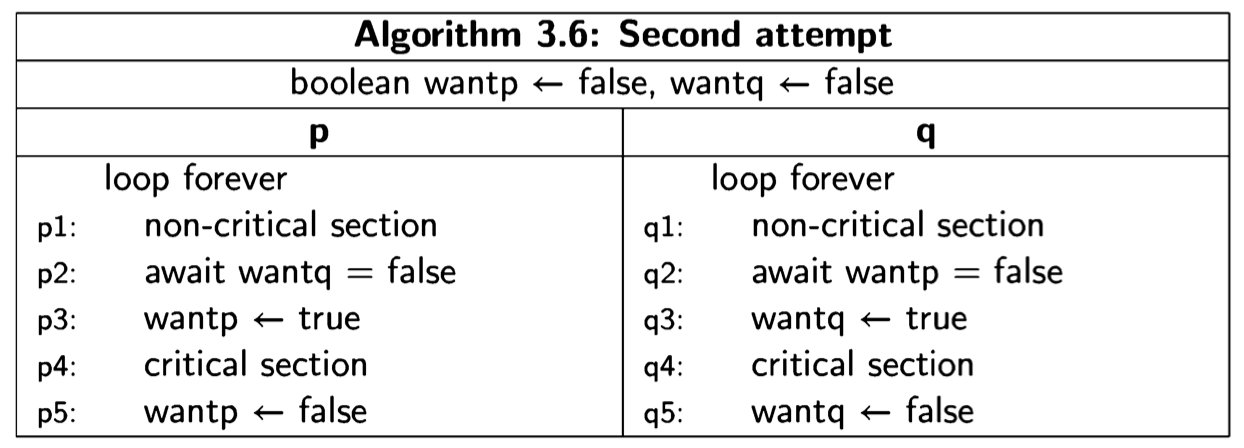

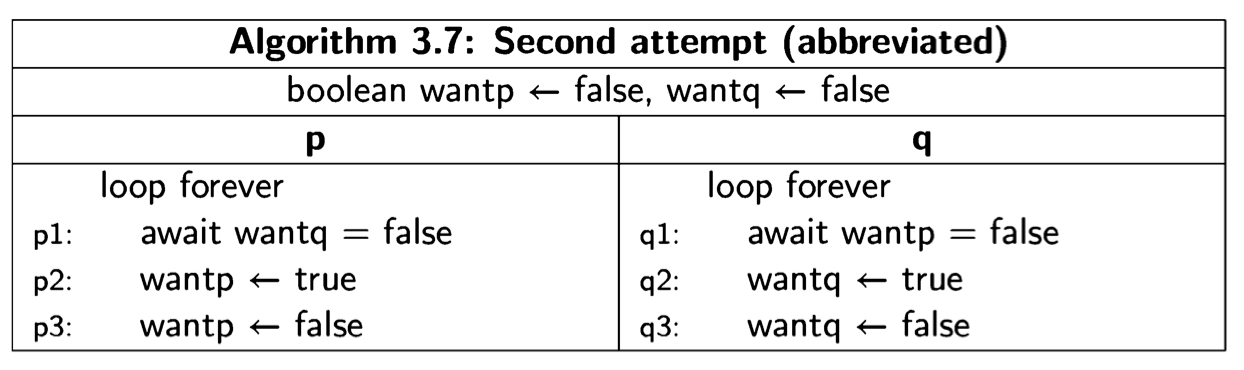

The first attempt was incorrect because both processes set and tested a single global variable. If one process dies, the other is blocked. Let us try to solve the critical section problem by providing each process with its own variable (Algorithm 3.6). The intended interpretation of the variables is that \(want_i\) is true from the step where process i wants to enter its critical section until it leaves the critical section. The await statements ensure that a process does not enter its critical section while the other process has its flag set. This solution does not suffer from the problem of the previous one: if a process halts in its non-critical section, the value of its variable want will remain false and the other process will always succeed in immediately entering its critical section.

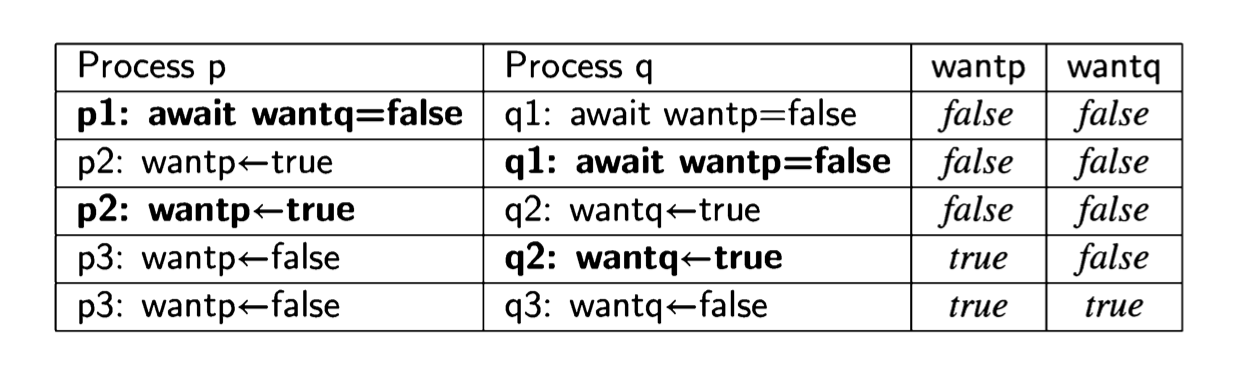

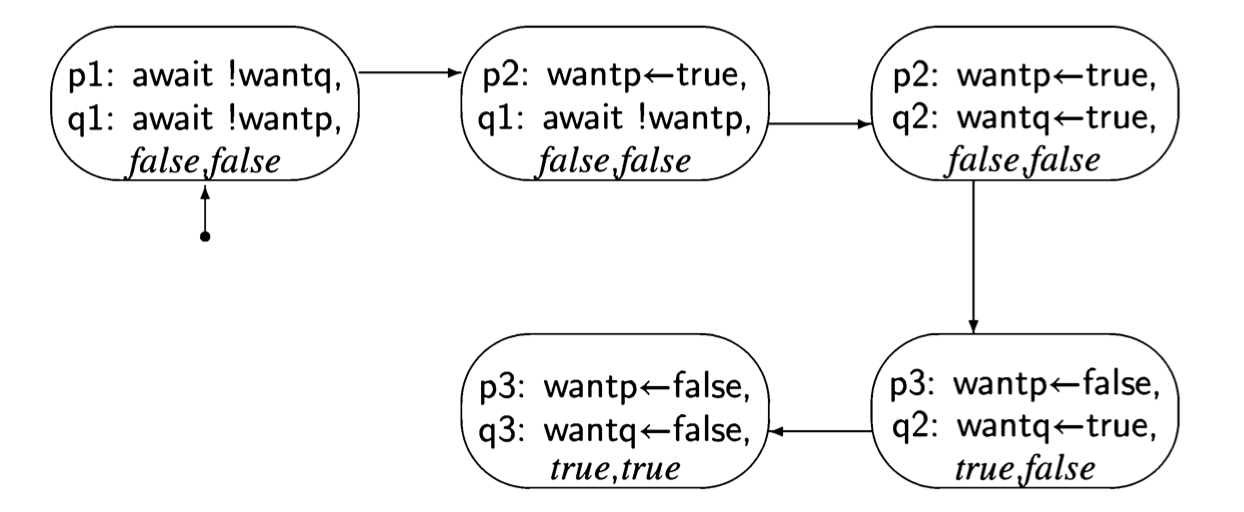

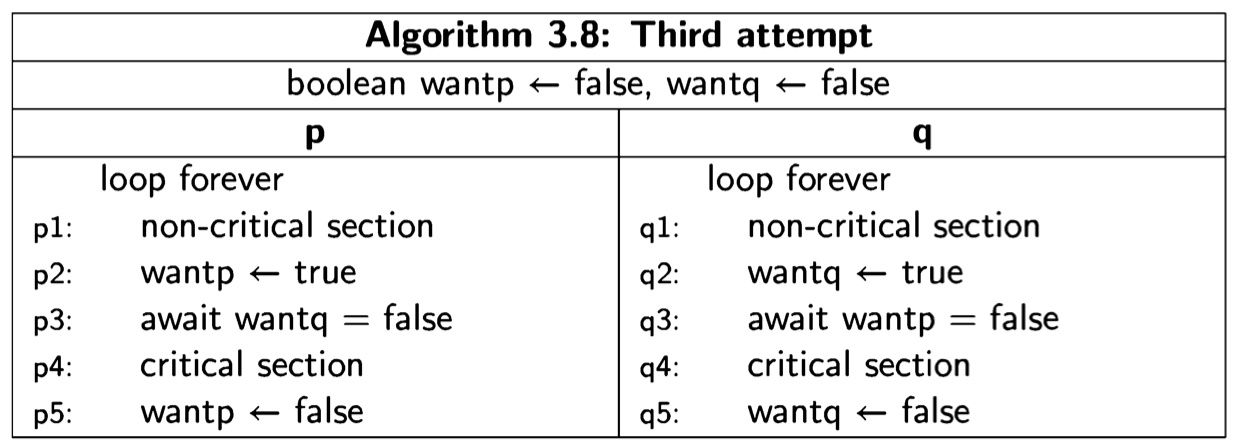

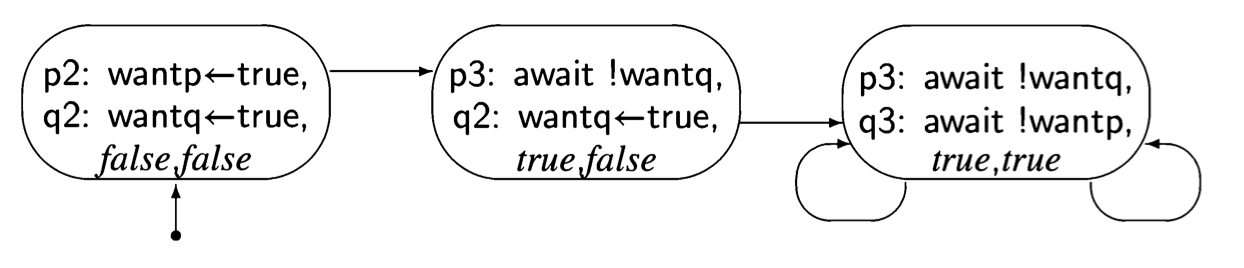

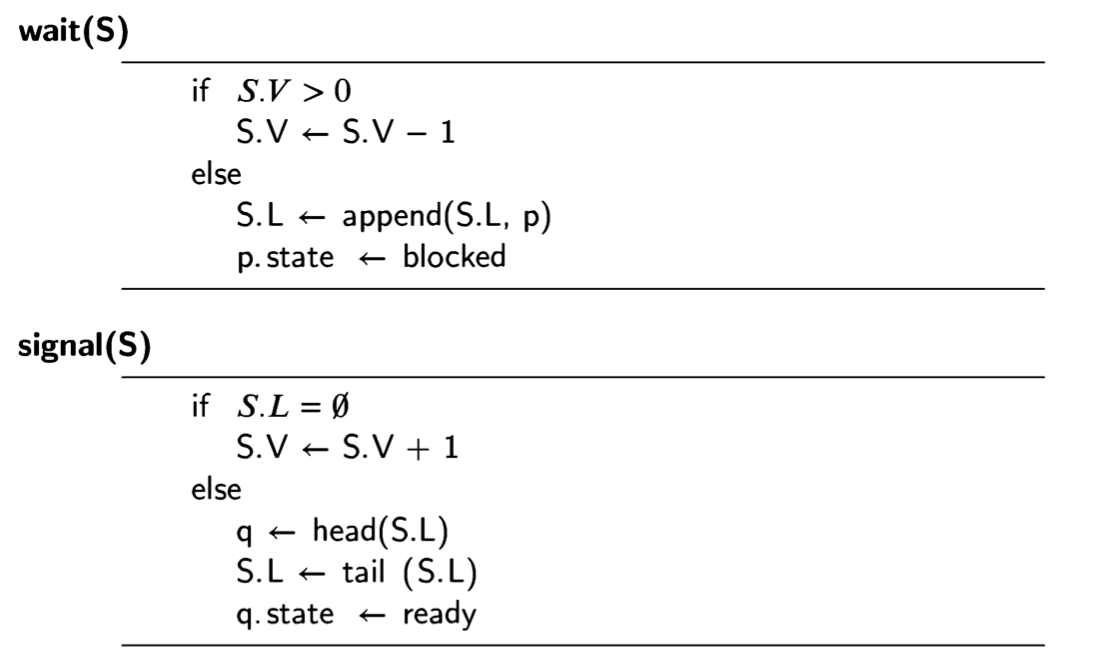

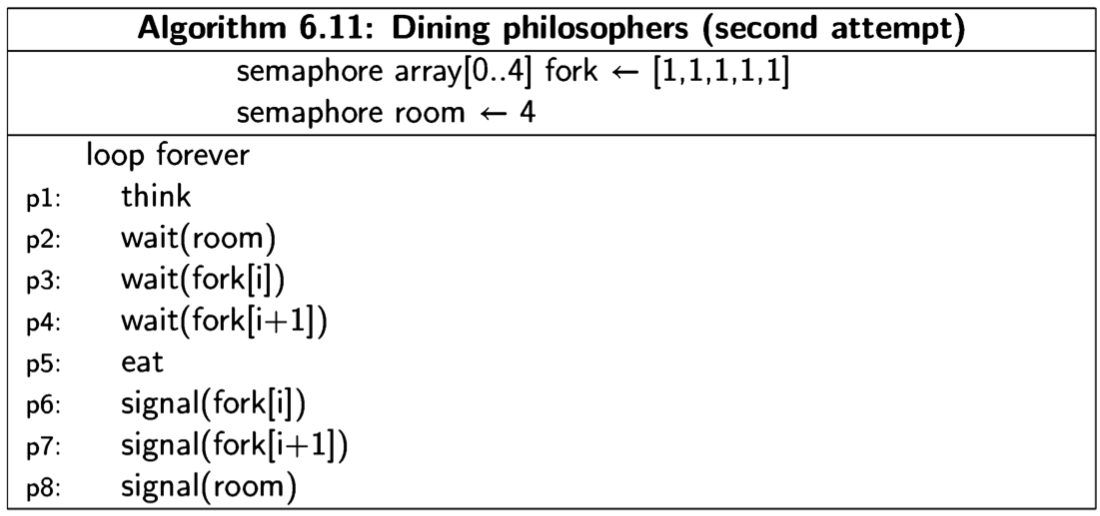

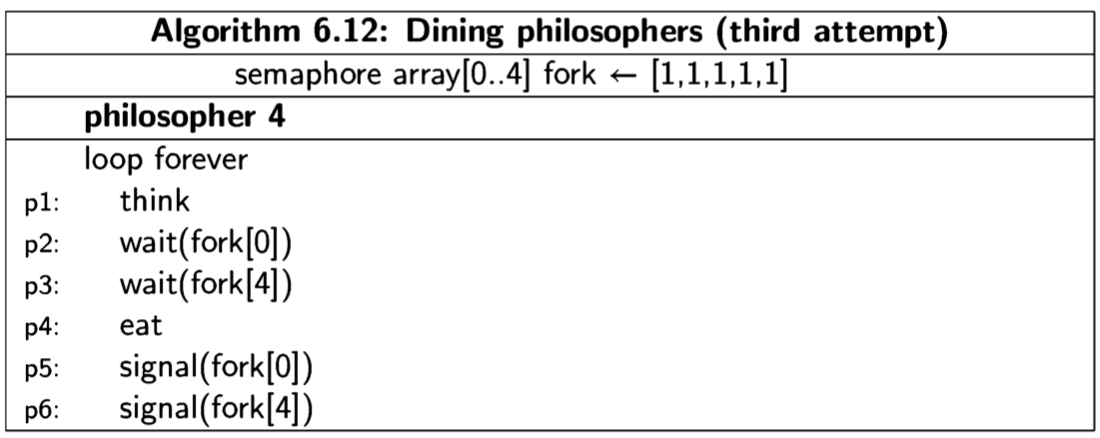

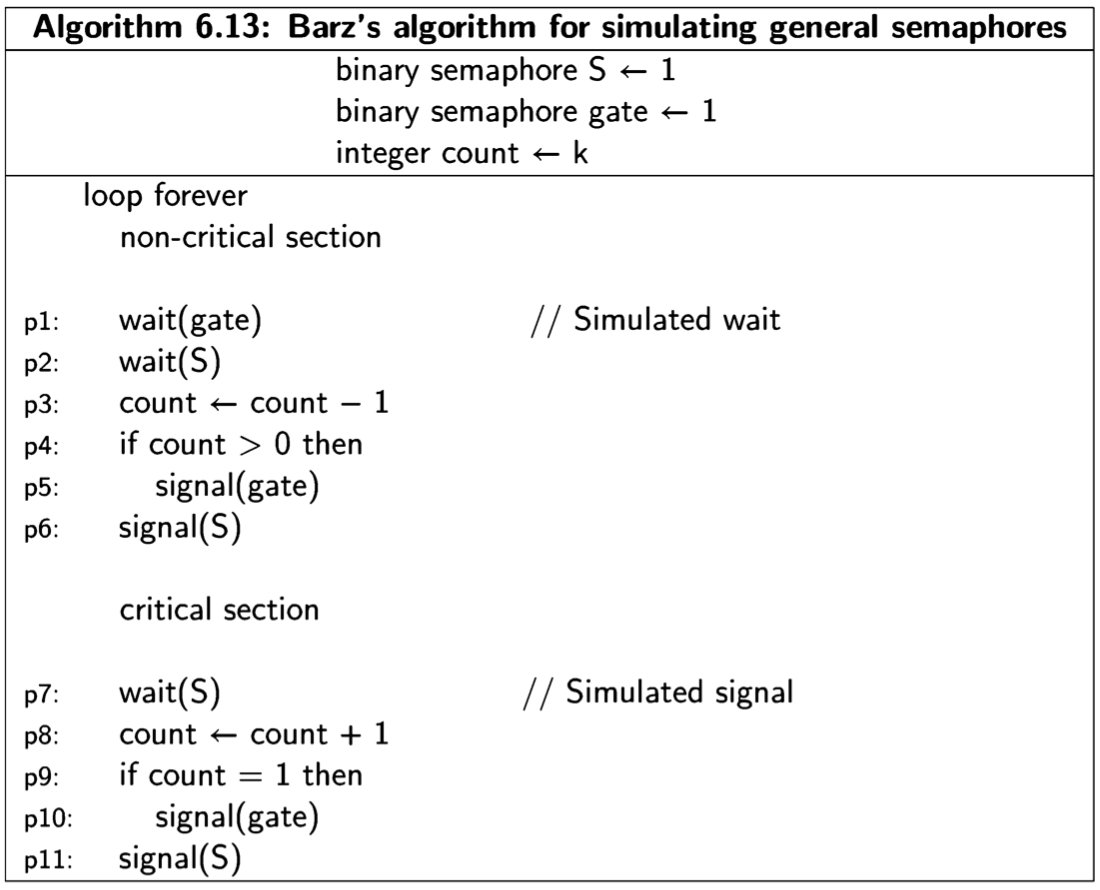

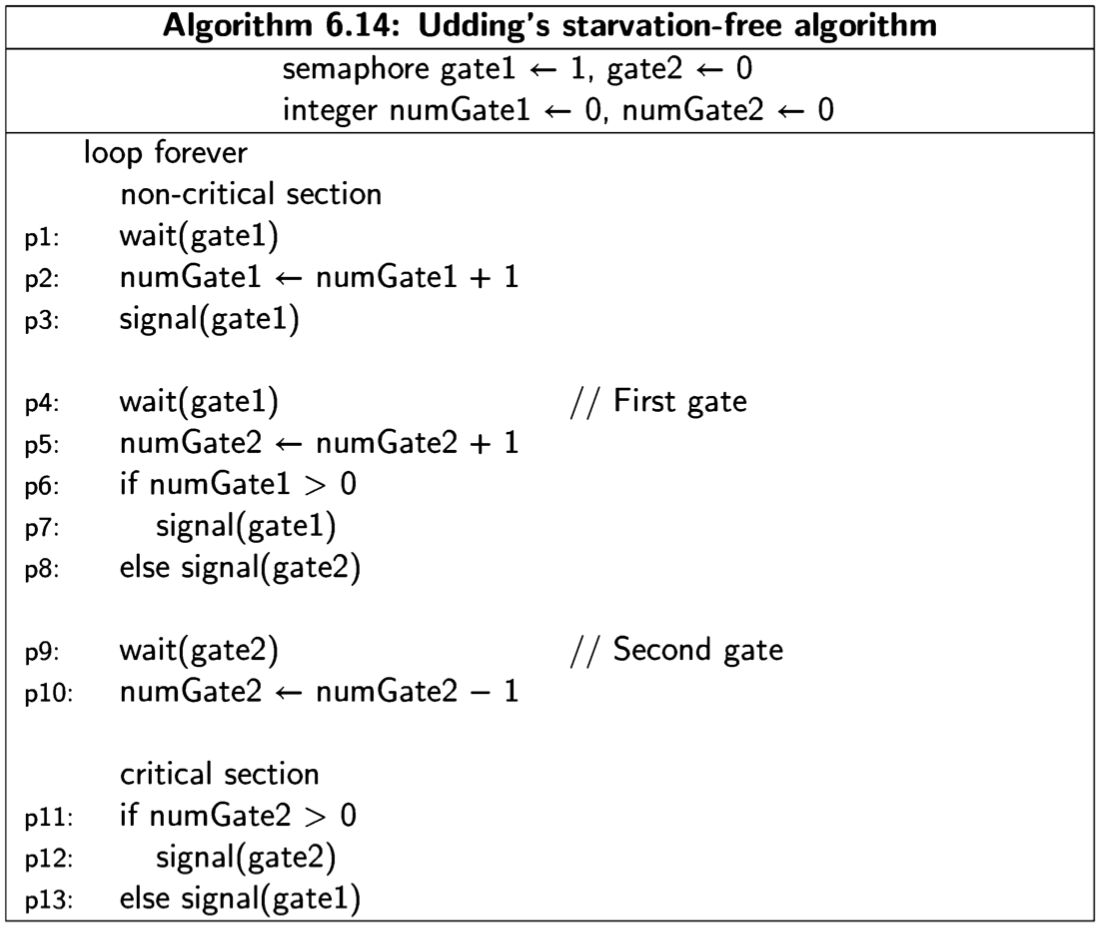

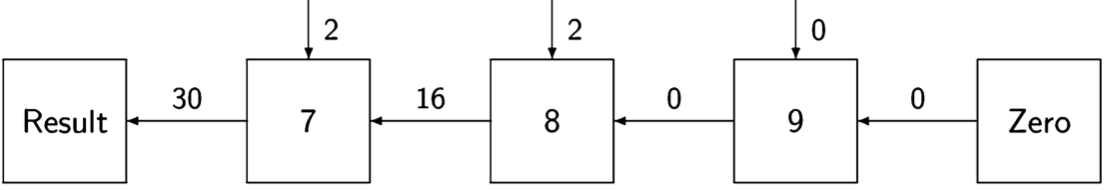

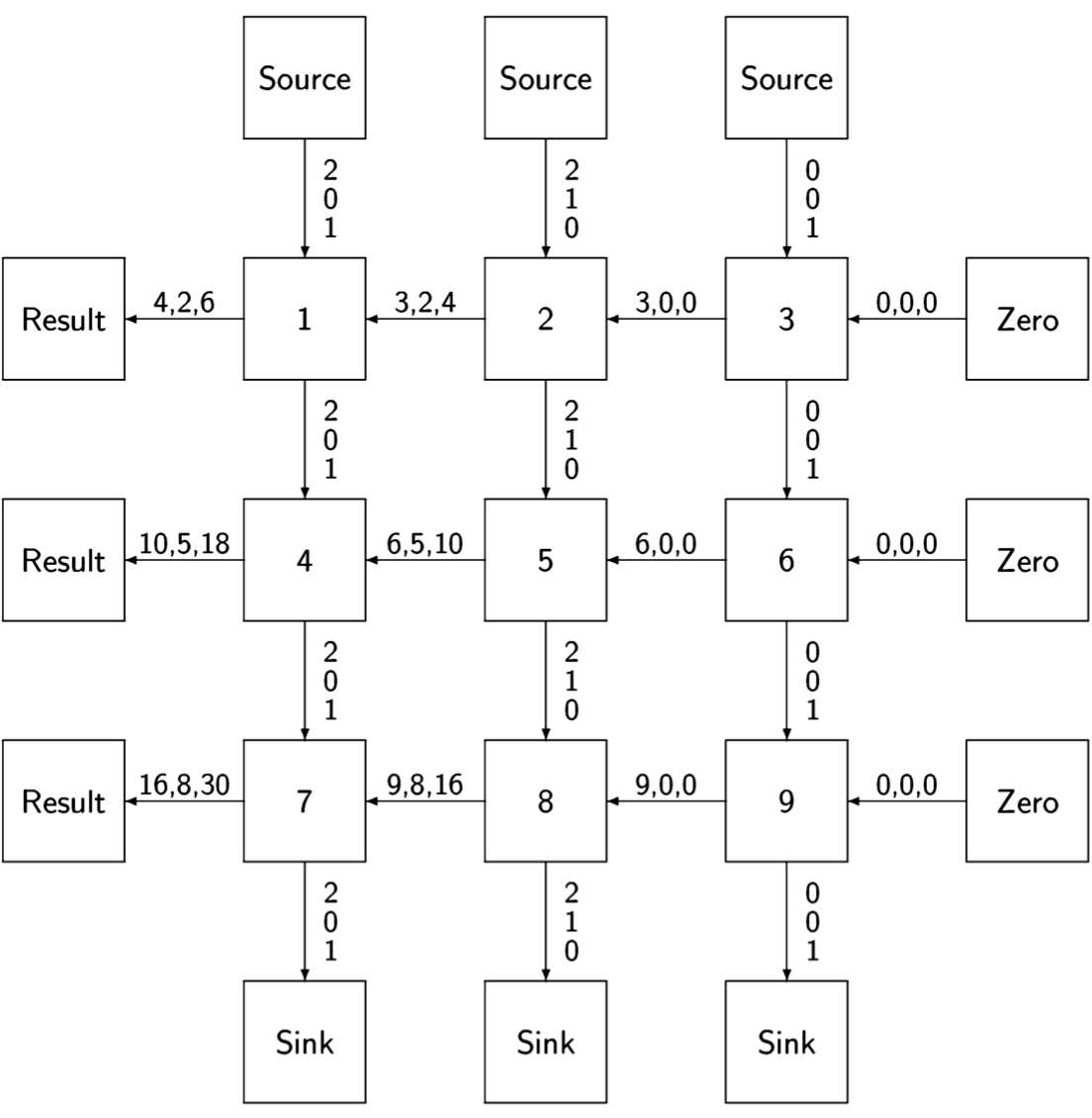

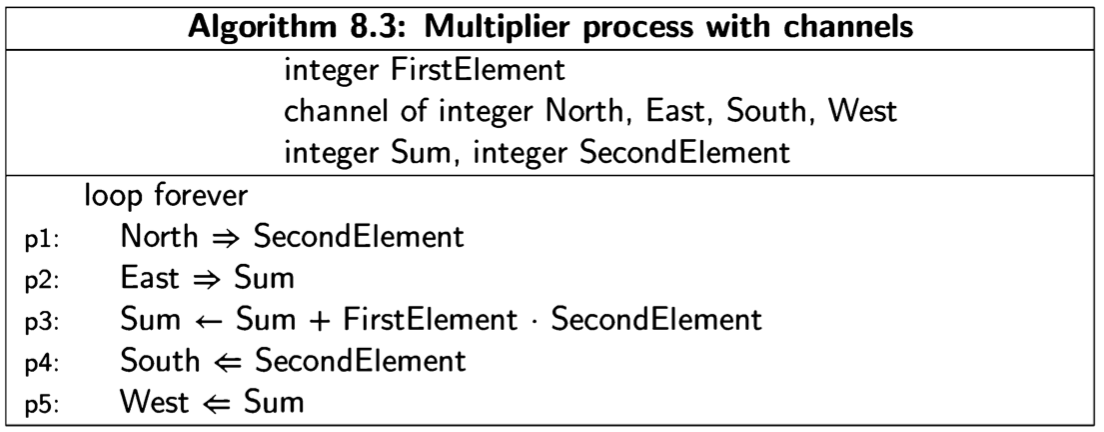

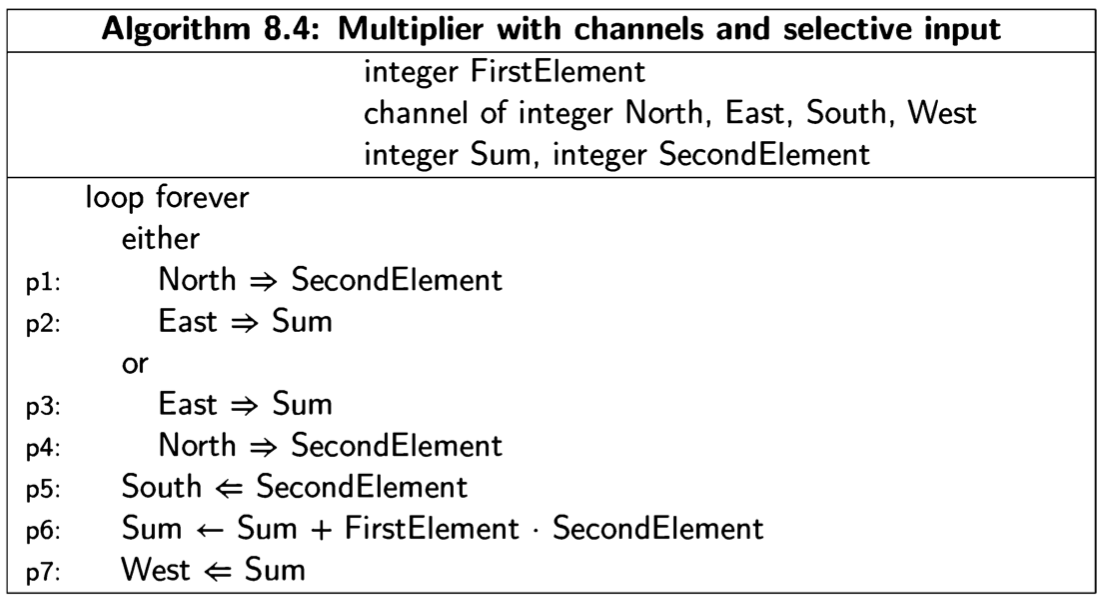

Let us construct a state diagram for the abbreviated algorithm: